Abstract

The human voice conveys unique characteristics of an individual, making voice biometrics a key technology for verifying identities in various industries. Despite the impressive progress of speaker recognition systems in terms of accuracy, a number of ethical and legal concerns have been raised, specifically relating to the fairness of such systems. In this paper, we aim to explore the disparity in performance achieved by state-of-the-art deep speaker recognition systems when different groups of individuals characterized by a common sensitive attribute (e.g., gender) are considered. In order to mitigate the unfairness we uncovered by means of an exploratory study, we investigate whether balancing the representation of the different groups of individuals in the training set can lead to a more equal treatment of these demographic groups. Experiments on two state-of-the-art neural architectures and a large-scale public dataset show that models trained with demographically-balanced training sets behave more fairly across groups, while still being accurate. Our study is expected to provide a solid basis for instilling beyond-accuracy objectives (e.g., fairness) in speaker recognition.

Motivation

Voice biometrics are widely used for identity verification, but concerns have emerged about the fairness of these systems. This work explores whether state-of-the-art deep speaker recognition models make systematically unfair decisions across demographic groups, and how such unfairness can be mitigated.

Research Questions

- RQ1: Do speaker recognition models exhibit disparate error rates across demographic groups?

- RQ2: Can balancing the training data reduce unfairness?

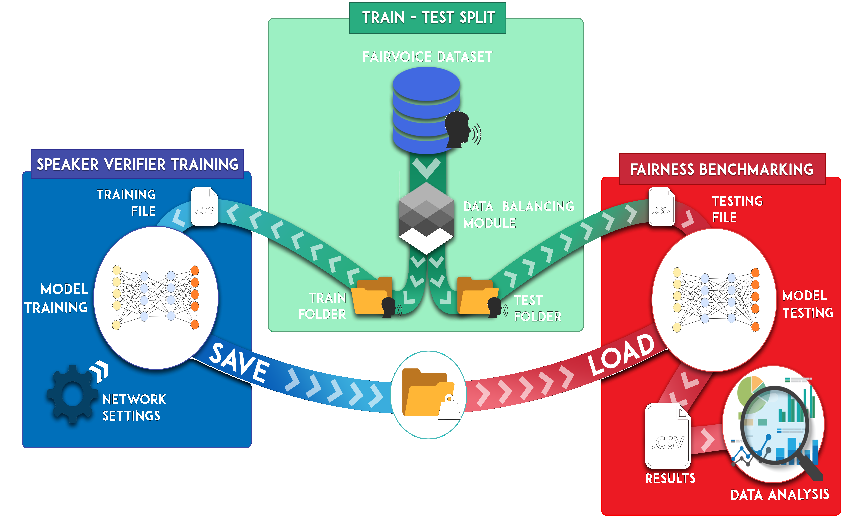

Methodology

- Models: X-Vector, Thin-ResNet

- Dataset: Large-scale public dataset with gender and age groups

- Metrics: Equal Error Rate (EER, the operating point at which FAR equals FRR), False Acceptance Rate (FAR), False Rejection Rate (FRR)

- Training: Unbalanced vs. demographically balanced data
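
The list above contrasts unbalanced and demographically balanced training data without spelling out how a balanced set is built. One simple way, sketched here under stated assumptions, is to undersample speakers so that every group contributes the same number of them. The utterance format (dicts with `speaker` and `group` keys), the `path` field, and the function name `balance_by_group` are hypothetical, and this is not necessarily the authors' exact protocol.

```python
import random
from collections import defaultdict


def balance_by_group(utterances, seed=0):
    """Return a subset of `utterances` in which every demographic group
    contributes the same number of speakers.

    Hypothetical sketch: each utterance is a dict with (assumed) keys
    "speaker" and "group" (e.g. a gender or age label), and each speaker
    belongs to exactly one group. Groups are undersampled at the speaker
    level to the size of the smallest group; all utterances of each
    selected speaker are kept.
    """
    # Map each group to the set of speakers that belong to it.
    speakers_by_group = defaultdict(set)
    for utt in utterances:
        speakers_by_group[utt["group"]].add(utt["speaker"])

    # The smallest group determines how many speakers each group keeps.
    n_keep = min(len(spk) for spk in speakers_by_group.values())

    rng = random.Random(seed)
    selected = set()
    for group in sorted(speakers_by_group):
        # Sort before sampling so results are reproducible for a fixed seed.
        candidates = sorted(speakers_by_group[group])
        selected.update(rng.sample(candidates, n_keep))

    return [utt for utt in utterances if utt["speaker"] in selected]


if __name__ == "__main__":
    # Toy corpus (hypothetical): one female speaker, three male speakers.
    toy = [
        {"speaker": "f1", "group": "female", "path": "f1_a.wav"},
        {"speaker": "f1", "group": "female", "path": "f1_b.wav"},
        {"speaker": "m1", "group": "male", "path": "m1_a.wav"},
        {"speaker": "m2", "group": "male", "path": "m2_a.wav"},
        {"speaker": "m3", "group": "male", "path": "m3_a.wav"},
    ]
    balanced = balance_by_group(toy)
    # Each group now contributes exactly one speaker.
    print(sorted({(u["group"], u["speaker"]) for u in balanced}))
```

Balancing at the speaker level keeps every recording of each retained speaker, so per-group utterance counts can still differ; a stricter variant would also cap the number of utterances per speaker, or balance jointly over several attributes such as gender and age.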

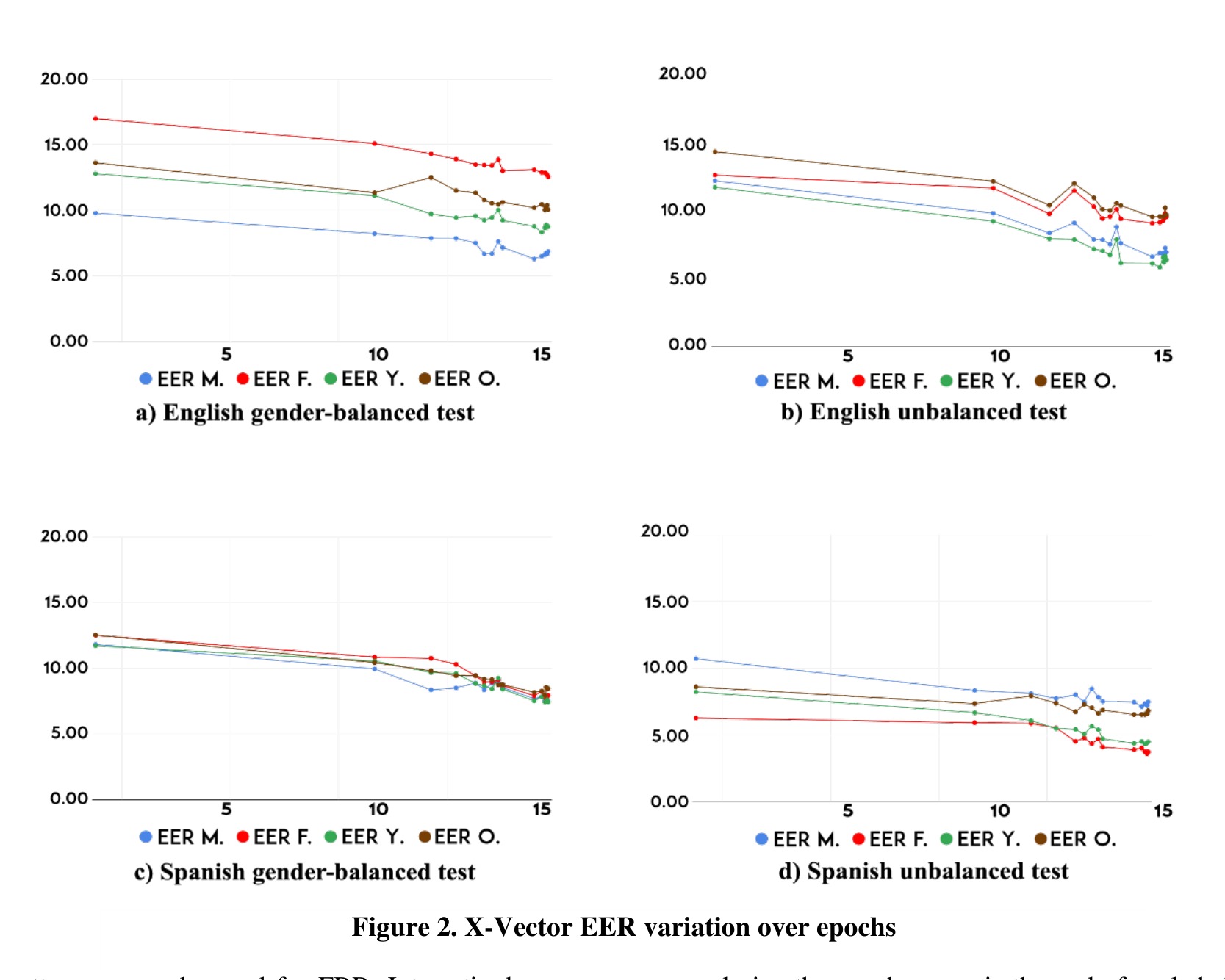

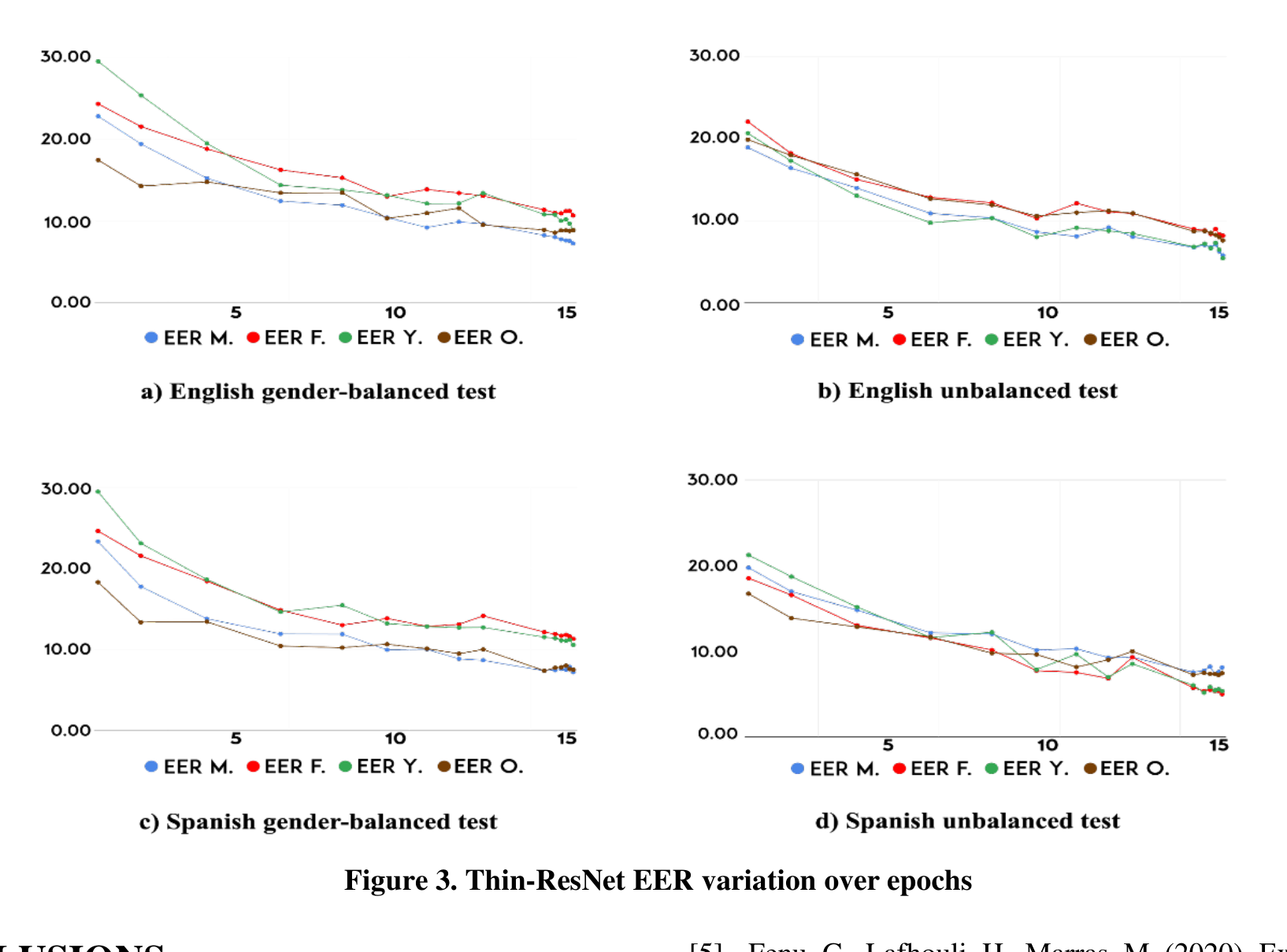

Key Results

Main Findings

- Balanced data helps: Larger and more demographically balanced datasets decrease disparity in EER among protected groups.

- FAR/FRR disparity reduced: Balancing the training data reduces differences in FAR and FRR among groups.

- Model choice matters: Thin-ResNet leads to lower disparity and is less sensitive to balance changes than X-Vector.

- Language effects: English models tend to systematically discriminate against certain groups, while Spanish models show spurious disparities.
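
The findings above compare EER, FAR, and FRR across demographic groups. The sketch below shows one common way to compute these quantities from verification trial scores, assuming a higher score means "same speaker", and to summarize disparity as the gap between the best- and worst-served group. The function names, the shared global threshold (placed at the overall EER point), and the max-minus-min gap are illustrative assumptions; the paper may define its disparity measures differently.

```python
import numpy as np


def far_frr(scores, labels, threshold):
    """False acceptance and false rejection rates at one threshold.

    A trial is accepted when its score is >= threshold.
    labels: 1 for genuine (same-speaker) trials, 0 for impostor trials.
    """
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels, dtype=int)
    accepted = scores >= threshold
    far = accepted[labels == 0].mean()     # impostors wrongly accepted
    frr = (~accepted[labels == 1]).mean()  # genuine speakers wrongly rejected
    return float(far), float(frr)


def eer(scores, labels):
    """Approximate EER by sweeping every observed score as a threshold and
    keeping the one where FAR and FRR are closest. Returns (eer, threshold)."""
    best_gap, best = np.inf, (None, None)
    for t in np.unique(scores):
        far, frr = far_frr(scores, labels, t)
        if abs(far - frr) < best_gap:
            best_gap, best = abs(far - frr), ((far + frr) / 2, float(t))
    return best


def group_report(scores, labels, groups):
    """Per-group EER, per-group FAR/FRR at one shared threshold, and the
    max-minus-min gap across groups for each metric.

    Assumes every group has both genuine and impostor trials.
    """
    scores, labels, groups = map(np.asarray, (scores, labels, groups))
    # A single deployment threshold shared by all groups (assumption).
    _, global_t = eer(scores, labels)
    report = {}
    for g in np.unique(groups):
        mask = groups == g
        g_eer, _ = eer(scores[mask], labels[mask])
        g_far, g_frr = far_frr(scores[mask], labels[mask], global_t)
        report[str(g)] = {"EER": g_eer, "FAR": g_far, "FRR": g_frr}
    gaps = {
        k: max(r[k] for r in report.values()) - min(r[k] for r in report.values())
        for k in ("EER", "FAR", "FRR")
    }
    return report, gaps


if __name__ == "__main__":
    # Toy trials: group "A" is perfectly separable, group "B" is not.
    scores = [0.9, 0.8, 0.1, 0.2, 0.6, 0.3, 0.5, 0.4]
    labels = [1, 1, 0, 0, 1, 1, 0, 0]
    groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
    report, gaps = group_report(scores, labels, groups)
    print(gaps)  # {'EER': 0.5, 'FAR': 0.5, 'FRR': 0.5}
```

Evaluating FAR and FRR at one shared threshold mirrors a deployed system, where a single decision threshold is applied to everyone; under that setting, a group whose score distributions are shifted absorbs more false acceptances or false rejections even when its own EER looks acceptable.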

BibTeX

@inproceedings{fenu2020improving,
  author = {Fenu, Gianni and Medda, Giacomo and Marras, Mirko and Meloni, Giacomo},
  title = {Improving Fairness in Speaker Recognition},
  booktitle = {Proceedings of the 2020 European Symposium on Software Engineering},
  pages = {129--136},
  year = {2020},
  doi = {10.1145/3393822.3432325}
}