Abstract

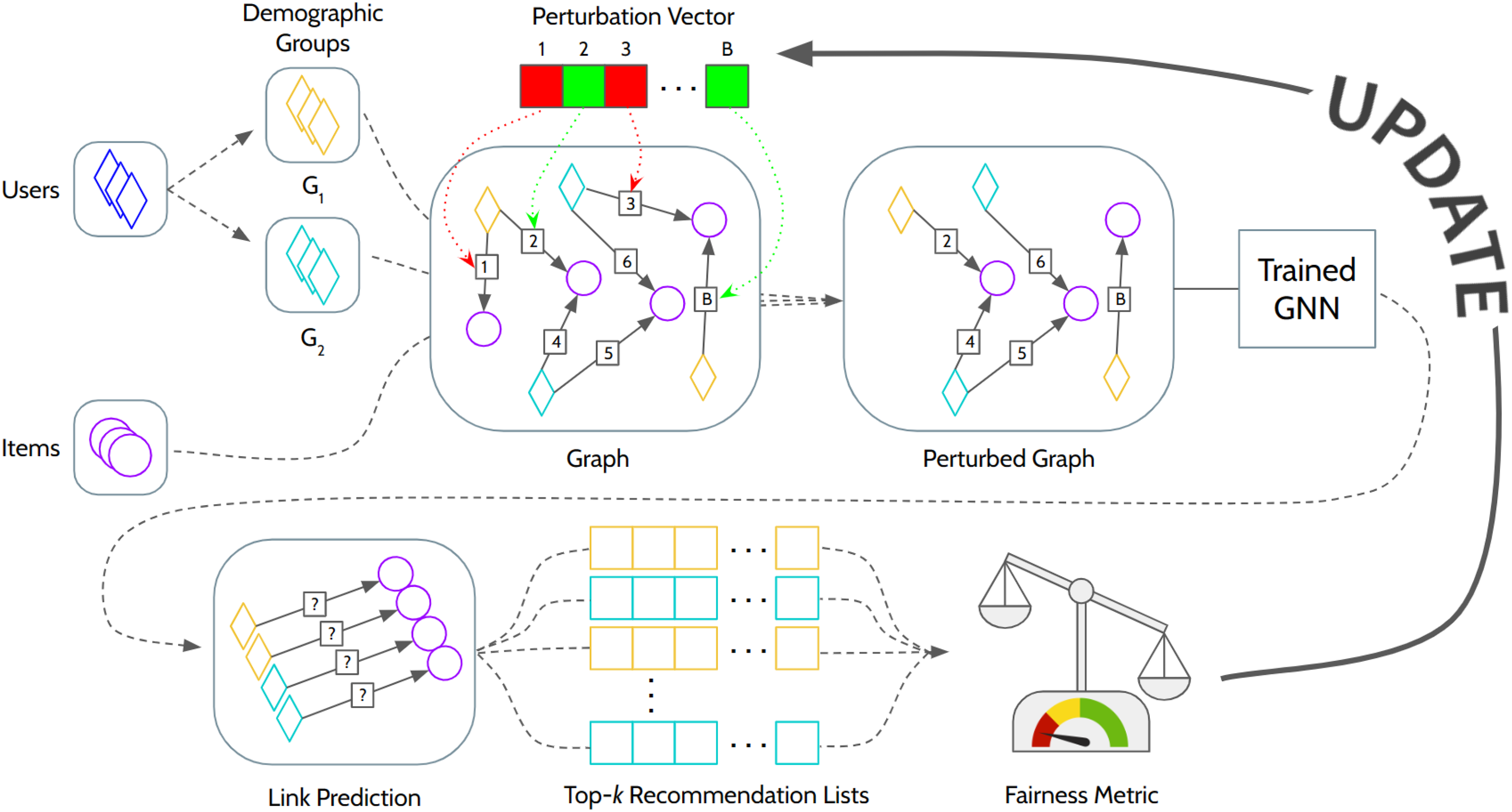

Research into personalization has increasingly focused on explainability and fairness. Several recent approaches can explain individual recommendations, either post hoc or through explanation paths. However, explainability techniques applied to unfairness in recommendation have been limited to finding the user/item features most related to biased recommendations. In this article, we devise a novel algorithm that leverages counterfactual methods to discover explanations of user unfairness in the form of user-item interactions. In our counterfactual framework, interactions are represented as edges in a bipartite graph, with users and items as nodes. Our bipartite graph explainer perturbs the topological structure to find an altered version that minimizes the disparity in utility between the protected and unprotected demographic groups. Experiments on four real-world graphs from various domains show that our method can systematically explain user unfairness on three state-of-the-art GNN-based recommendation models. Moreover, an empirical evaluation of the perturbed network uncovers relevant patterns that justify the nature of the unfairness discovered by the generated explanations.

Motivation

Modern recommender systems have become highly effective but increasingly complex, raising concerns about trustworthiness, fairness, and explainability. While algorithmic fairness ensures equitable recommendations across demographic groups, understanding why a model is unfair remains a central yet under-explored challenge.

Key Insight: Existing approaches explain unfairness through user/item features, which are not always available. GNNUERS instead explains unfairness directly through user-item interactions, the fundamental data source for collaborative filtering models.

Method Overview

GNNUERS (GNN-based Unfairness Explainer in Recommender Systems) is a framework that:

- Represents interactions as a bipartite graph with users and items as nodes, and interactions as edges

- Perturbs the topological structure to identify edges (interactions) causing unfairness

- Minimizes demographic disparity while constraining the number of perturbed edges
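The three steps above can be illustrated on a toy graph. The sketch below is not the authors' implementation: the data, the function names `biadjacency` and `perturb`, and the choice of which edge to drop are all hypothetical. It only shows how interactions map to a user-by-item biadjacency matrix and how removing edges produces a perturbed graph whose distance from the original can be counted.

```python
import numpy as np

# Toy interaction data (hypothetical): 3 users, 4 items.
n_users, n_items = 3, 4
edges = np.array([[0, 0], [0, 2], [1, 1], [1, 2], [2, 3]])  # (user, item) pairs

def biadjacency(edges, n_users, n_items):
    """Build the user-by-item biadjacency matrix of the bipartite graph."""
    B = np.zeros((n_users, n_items), dtype=np.int8)
    B[edges[:, 0], edges[:, 1]] = 1
    return B

def perturb(edges, keep_mask):
    """Return the edge list without the edges flagged False in keep_mask."""
    return edges[np.asarray(keep_mask, dtype=bool)]

B = biadjacency(edges, n_users, n_items)
keep = np.array([True, False, True, True, True])  # drop the edge (user 0, item 2)
B_tilde = biadjacency(perturb(edges, keep), n_users, n_items)
removed = int((B != B_tilde).sum())  # number of perturbed edges
```

In this toy case `removed` counts the single deleted interaction; in the framework, the set of removed edges is what serves as the explanation.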

Counterfactual Explanation

The core idea is counterfactual reasoning: we ask "What if certain users had not interacted with certain items?" By finding the minimal set of edges whose removal reduces unfairness, we explain which interactions caused the disparity.

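Formal Definition: A counterfactual explanation $\tilde{E}$ is a subset of the edges in the adjacency matrix $A$ such that removing it yields a perturbed matrix $\tilde{A}$ with lower unfairness, i.e. $\Phi(f(\tilde{A})) < \Phi(f(A))$, where $f$ is the recommendation model and $\Phi$ measures the utility disparity between demographic groups.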

Loss Function

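The optimization is guided by a loss with two terms:

$$\mathcal{L}(A, \tilde{A}) = \mathcal{L}_{\text{fair}} + \mathcal{L}_{\text{dist}}$$

Where: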

- $\mathcal{L}_{\text{fair}}$: Minimizes the demographic parity gap (NDCG disparity between groups)

- $\mathcal{L}_{\text{dist}}$: Constrains the number of perturbed edges
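To make the two terms concrete, here is a minimal, non-differentiable sketch. It assumes per-user utilities (for example NDCG values) are already computed, and it measures the distance term as a raw count of changed matrix entries. The function names and the `weight` parameter are hypothetical and do not come from the source. A gradient-based optimizer would need differentiable surrogates for both terms.

```python
import numpy as np

def fairness_loss(utility, protected):
    """Absolute gap in mean utility between the unprotected and protected groups."""
    utility = np.asarray(utility, dtype=float)
    protected = np.asarray(protected, dtype=bool)
    return abs(utility[~protected].mean() - utility[protected].mean())

def distance_loss(A, A_tilde):
    """Number of entries that differ between the original and perturbed matrices."""
    return int(np.sum(np.asarray(A) != np.asarray(A_tilde)))

def total_loss(utility, protected, A, A_tilde, weight=1.0):
    # `weight` is a hypothetical trade-off knob, not taken from the source.
    return fairness_loss(utility, protected) + weight * distance_loss(A, A_tilde)

# Toy values (hypothetical): two protected and two unprotected users.
utility = [0.5, 0.25, 0.75, 0.5]
protected = [True, True, False, False]
A = np.array([[1, 1], [1, 0]])
A_tilde = np.array([[1, 0], [1, 0]])  # one edge removed
loss = total_loss(utility, protected, A, A_tilde)
```

Because the raw edge count is on a different scale from a utility gap in [0, 1], a practical setup would likely rescale one of the two terms.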

Key Contributions

- Novel Framework: First method to explain unfairness in GNN-based recommender systems via counterfactual edge perturbation

- Memory Efficient: Uses a perturbation vector instead of a matrix, storing values only for existing edges

- Gradient Deactivation: Focuses perturbation on the unprotected group to identify edges advantaging them

- Graph Property Analysis: Categorizes explanations through degree, density, and intra-group distance metrics
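The perturbation vector and gradient deactivation can be sketched as follows. This is an illustration under stated assumptions, not the authors' code: the sigmoid with a 0.5 threshold is a common choice in counterfactual graph explainers but is assumed here, and `masked_step`, the learning rate, and the gradient values are hypothetical. The vector holds one value per existing edge, so memory scales with the number of interactions rather than with users times items.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def keep_mask(p):
    """Binarize the perturbation vector: an edge is kept when sigmoid(p) >= 0.5."""
    return sigmoid(p) >= 0.5

def masked_step(p, grad, edge_users, unprotected_users, lr=0.1):
    """One gradient step that only updates edges belonging to unprotected users."""
    active = np.isin(edge_users, list(unprotected_users))
    return p - lr * grad * active  # the gradient is zeroed ("deactivated") elsewhere

edge_users = np.array([0, 0, 1, 2])       # user endpoint of each existing edge
p = np.ones(4)                            # one value per existing edge, all kept initially
grad = np.array([20.0, 0.0, 20.0, 20.0])  # hypothetical loss gradient
p_new = masked_step(p, grad, edge_users, unprotected_users={0, 2})
kept = keep_mask(p_new)
```

The third edge belongs to user 1, who is outside the unprotected group in this toy setup, so its value is left untouched even though its gradient is large.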

Experimental Setup

Datasets

| Dataset | Domain | Users | Items | Interactions |
|---|---|---|---|---|
| ML-1M | Movies | 6,040 | 3,706 | 1,000,209 |
| LFM-1K | Music | 268 | 51,609 | 200,586 |
| Ta Feng | Grocery | 25,741 | 23,643 | 708,919 |
| Insurance | Insurance | 346 | 20 | 1,879 |

GNN Models

- GCMC: Graph Convolutional Matrix Completion

- NGCF: Neural Graph Collaborative Filtering

- LightGCN: Simplified GCN for recommendation

Key Results

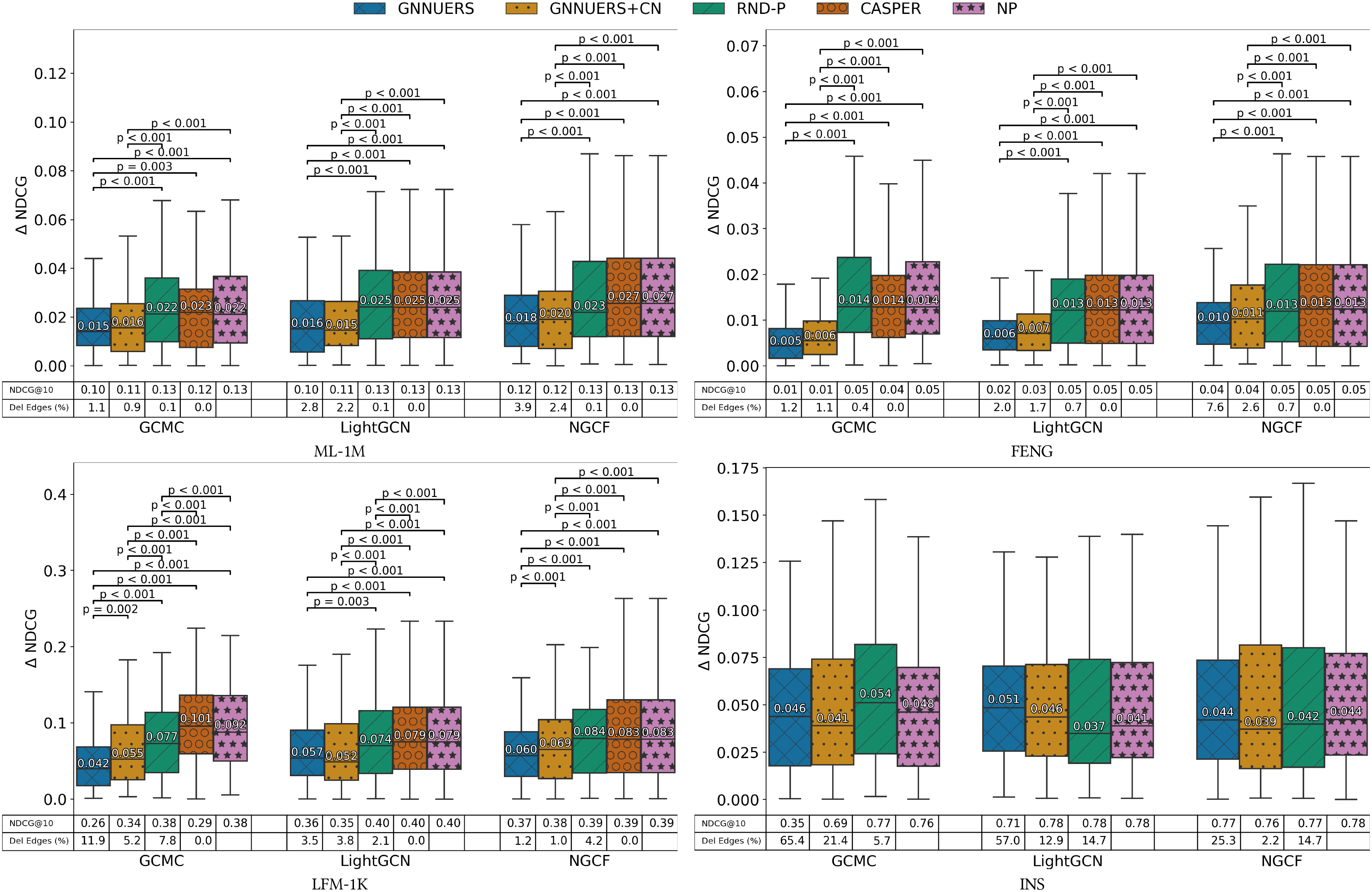

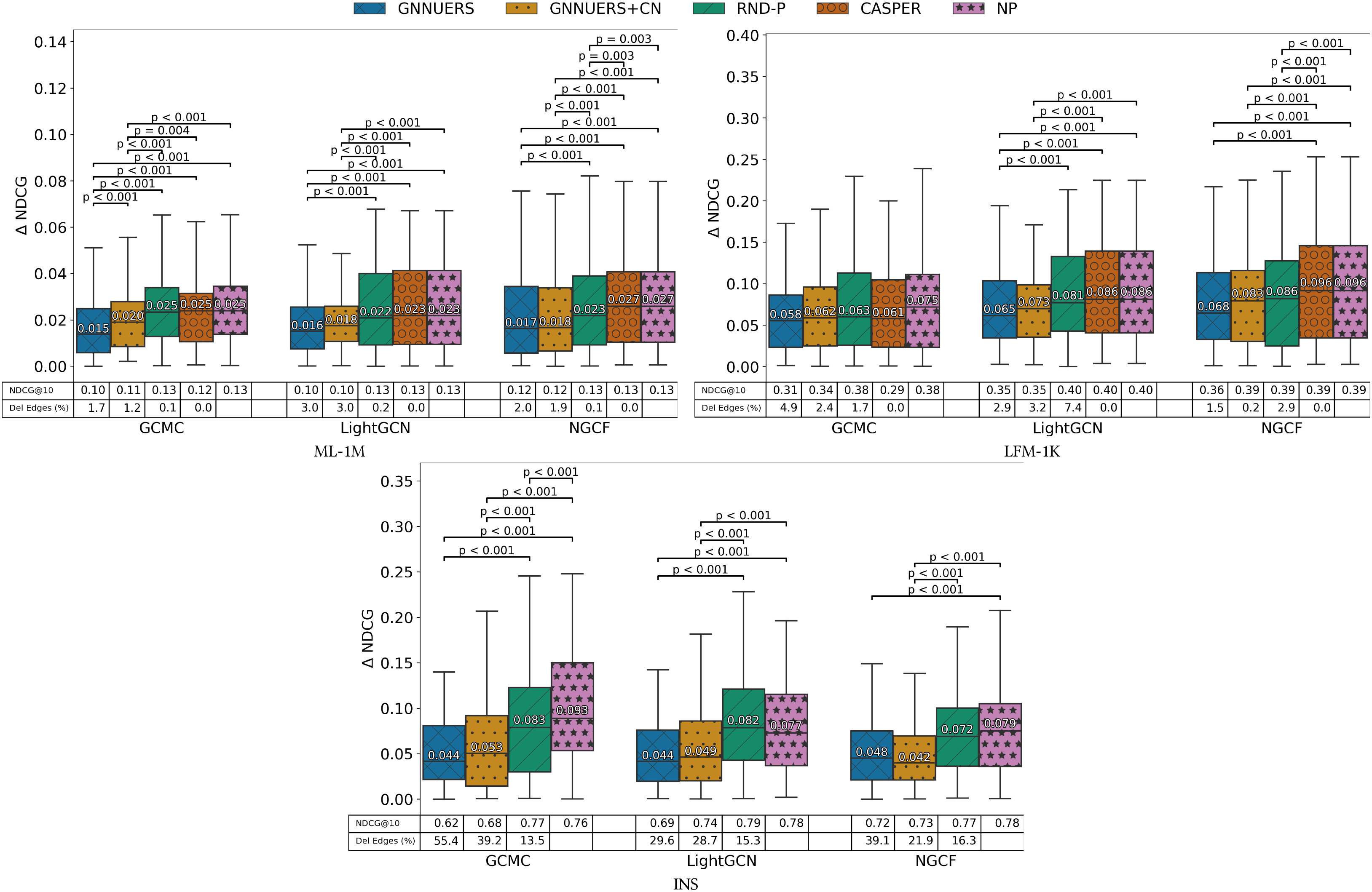

Unfairness Reduction

GNNUERS significantly reduces the NDCG disparity (ΔNDCG) between demographic groups:

- ML-1M & Ta Feng (FENG): Achieves a significant fairness improvement by perturbing only ~1% of the edges

- Systematic explanations: Works across different models and sensitive attributes (age/gender)

- Minimal utility loss: Protected group utility is preserved while reducing unfairness
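For readers unfamiliar with the metric, the sketch below shows one common way to compute NDCG@k with binary relevance and to compare group averages. It is an assumption-laden illustration: the function names, the binary-relevance setting, and the toy rankings are hypothetical, and the source does not specify the exact NDCG variant used.

```python
import numpy as np

def ndcg_at_k(ranked_items, relevant_items, k):
    """Binary-relevance NDCG@k for one user's ranked recommendation list."""
    relevant = set(relevant_items)
    gains = np.array([1.0 if item in relevant else 0.0 for item in ranked_items[:k]])
    discounts = 1.0 / np.log2(np.arange(2, gains.size + 2))
    dcg = float(np.sum(gains * discounts))
    ideal_hits = min(len(relevant), k)
    idcg = float(np.sum(1.0 / np.log2(np.arange(2, ideal_hits + 2))))
    return dcg / idcg if idcg > 0 else 0.0

def group_means(ndcg_per_user, protected):
    """Return (mean NDCG of unprotected users, mean NDCG of protected users)."""
    ndcg = np.asarray(ndcg_per_user, dtype=float)
    protected = np.asarray(protected, dtype=bool)
    return float(ndcg[~protected].mean()), float(ndcg[protected].mean())

# Toy example (hypothetical): one unprotected user and one protected user.
scores = [
    ndcg_at_k([10, 20, 30], relevant_items=[10], k=3),  # relevant item ranked first
    ndcg_at_k([20, 10, 30], relevant_items=[10], k=3),  # relevant item ranked second
]
unprotected_mean, protected_mean = group_means(scores, protected=[False, True])
delta_ndcg = abs(unprotected_mean - protected_mean)
```

Keeping the two group means separate, rather than only their difference, makes it possible to check whether the gap narrows because the protected group gains or because the unprotected group loses utility.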

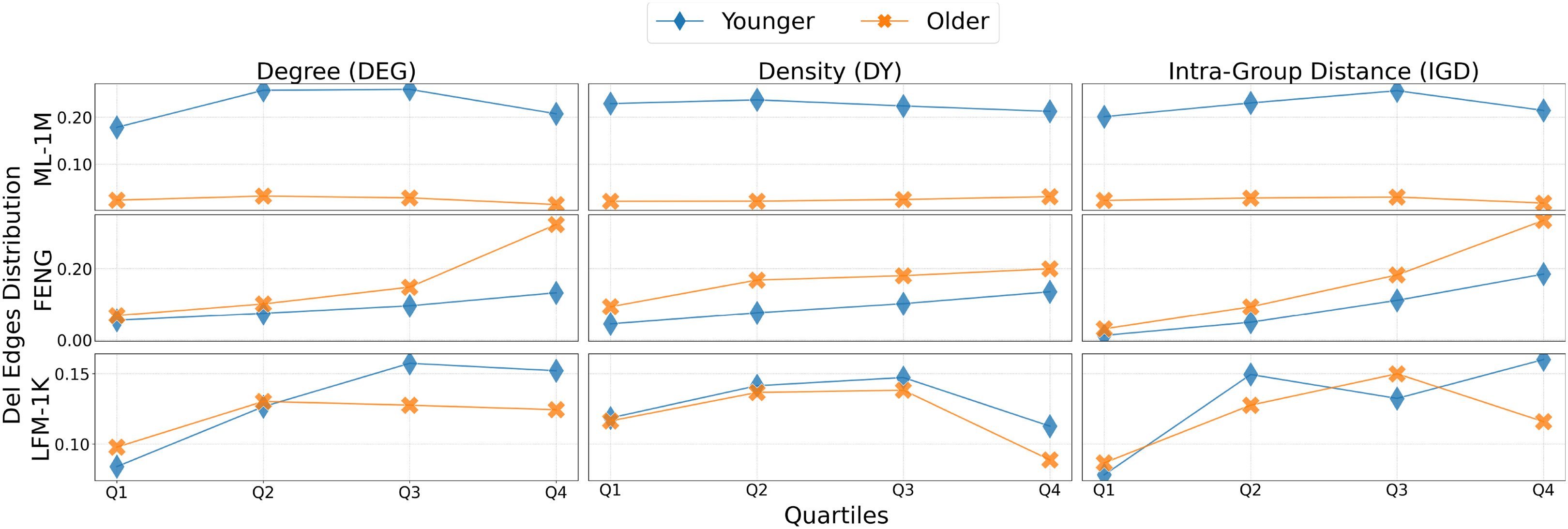

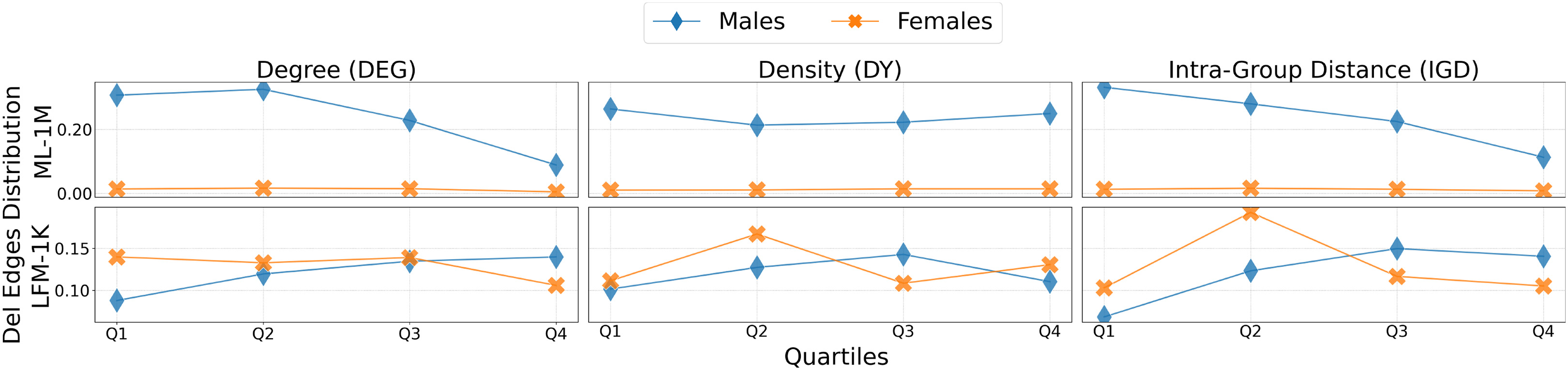

Pattern Discovery

Through graph property analysis, GNNUERS reveals:

- Over-representation effects: Unfairness often stems from larger/more active demographic groups

- Popularity bias connection: High-density users (consuming popular items) contribute to unfairness

- Isolation patterns: Low-IGD (isolated) users' interactions can significantly impact fairness

Experimental Results

Graph Properties for Explanation

We analyze perturbed edges through three topological properties:

| Property | Definition | Insight |
|---|---|---|
| Degree (DEG) | Number of edges per node | User activity / Item popularity |
| Density (DY) | Tendency to connect to high-degree nodes | Popularity bias |
| Intra-Group Distance (IGD) | Closeness to same-type nodes | Community structure |
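The first two properties can be approximated directly from the biadjacency matrix. In the sketch below, degree follows the table's definition, while `mean_neighbor_degree` is only a hypothetical proxy for density (the average popularity of the items a user interacted with); the exact formulas used for DY and IGD are not given here, so IGD is omitted.

```python
import numpy as np

def user_degree(B):
    """Number of interactions per user (row sums of the biadjacency matrix)."""
    return np.asarray(B).sum(axis=1)

def item_degree(B):
    """Number of interactions per item (column sums), i.e. item popularity."""
    return np.asarray(B).sum(axis=0)

def mean_neighbor_degree(B):
    """Hypothetical density proxy: average degree of the items each user interacted with."""
    B = np.asarray(B)
    deg_u = user_degree(B)
    total = B @ item_degree(B)  # summed popularity of each user's items
    return np.divide(total, deg_u, out=np.zeros(len(deg_u)), where=deg_u > 0)

# Toy biadjacency matrix (hypothetical): 3 users x 3 items.
B = np.array([[1, 1, 0],
              [1, 0, 0],
              [1, 0, 1]])
deg = user_degree(B)
pop = item_degree(B)
proxy = mean_neighbor_degree(B)
```

Here user 1 has the lowest degree but the highest proxy value, because their only interaction is with the most popular item.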

Implications

GNNUERS enables system designers and service providers to:

- Understand unfairness sources at the interaction level

- Design informed mitigation strategies based on discovered patterns

- Audit recommendation systems for demographic disparities

- Balance fairness and utility with minimal perturbation

Resources

BibTeX

@article{medda2024gnnuers,
  author = {Medda, Giacomo and Fabbri, Francesco and Marras, Mirko and Boratto, Ludovico and Fenu, Gianni},
  title = {GNNUERS: Fairness Explanation in GNNs for Recommendation via Counterfactual Reasoning},
  journal = {ACM Transactions on Intelligent Systems and Technology},
  volume = {16},
  number = {1},
  articleno = {6},
  year = {2024},
  publisher = {ACM},
  doi = {10.1145/3655631},
  url = {https://doi.org/10.1145/3655631}
}