Abstract

Recent developments in recommendation have harnessed the collaborative power of graph neural networks (GNNs) in learning users’ preferences from user-item networks. Despite emerging regulations addressing fairness of automated systems, unfairness issues in graph collaborative filtering remain underexplored, especially from the consumer’s perspective. Although consumer unfairness has received numerous contributions, only a few of these works have delved into GNNs. A notable gap exists in the formalization of the latest mitigation algorithms, as well as in their effectiveness and reliability on cutting-edge models. This paper responds to recent research highlighting unfairness issues in graph collaborative filtering by reproducing one of the latest mitigation methods. The reproduced technique adjusts the system fairness level by learning a fair graph augmentation. Under an experimental setup based on 11 GNNs, 5 non-GNN models, and 5 real-world networks across diverse domains, our investigation reveals that fair graph augmentation is consistently effective on high-utility models and large datasets. Experiments on the transferability of the fair augmented graph open new issues for future recommendation studies.

Motivation

Why study fairness-aware graph augmentation? Graph Neural Networks (GNNs) have become the state of the art for collaborative filtering. However, unfairness issues in graph collaborative filtering remain underexplored, especially from the consumer's perspective. A notable gap exists in the formalization and evaluation of fairness mitigation algorithms on cutting-edge GNN models.

This paper serves as a solid response to recent research highlighting unfairness issues by:

- Reproducing a state-of-the-art fair graph augmentation method

- Evaluating across an extensive setup: 11 GNNs, 5 non-GNN models, 5 real-world networks

- Investigating the transferability of fair augmented graphs to new models

Approach Overview

The augmentation process modifies the user-item interaction graph to reduce unfairness disparities between demographic groups. Given a bipartite graph $G = (U, I, E)$ where $U$ is the user set, $I$ is the item set, and $E$ is the edge set representing interactions, the goal is to find an augmented graph $\tilde{G}$ that improves fairness while preserving recommendation quality:

$$\min_{\tilde{G}} \mathcal{L}_{unfair}(f(\tilde{G})) + \lambda \cdot \mathcal{L}_{utility}(f(\tilde{G}))$$
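
As a minimal sketch of how this objective could be evaluated for one candidate augmentation, the snippet below assumes that unfairness is the absolute gap in mean per-user utility between two demographic groups and that the utility loss is the negative mean utility. Both choices, and the names `unfairness_loss`, `utility_loss`, and `objective`, are illustrative assumptions rather than the paper's exact formulation; the per-user utility arrays stand in for the output of $f(\tilde{G})$.

```python
import numpy as np

def unfairness_loss(user_utility, group):
    """Absolute gap in mean utility between group 0 and group 1 (assumed form)."""
    user_utility = np.asarray(user_utility, dtype=float)
    group = np.asarray(group)
    gap = user_utility[group == 0].mean() - user_utility[group == 1].mean()
    return float(abs(gap))

def utility_loss(user_utility):
    """Negative mean utility, so minimizing it preserves quality (assumed form)."""
    return -float(np.mean(user_utility))

def objective(user_utility, group, lam=1.0):
    """L_unfair + lambda * L_utility for the utilities obtained on one graph."""
    return unfairness_loss(user_utility, group) + lam * utility_loss(user_utility)

# Toy per-user utilities (e.g. NDCG) on the original graph and on a
# hypothetical augmented graph; the values are made up for illustration.
group = np.array([0, 0, 1, 1])
original = np.array([0.5, 0.25, 0.25, 0.0])     # group means 0.375 vs 0.125
augmented = np.array([0.25, 0.25, 0.25, 0.25])  # group means are equal

score_original = objective(original, group, lam=0.5)
score_augmented = objective(augmented, group, lam=0.5)
print(score_original, score_augmented)
```

In this toy case the augmented graph scores lower because the group gap vanishes while the mean utility stays the same; in practice the search over $\tilde{G}$ is what the mitigation method learns.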

Experimental Setup

Datasets

| Dataset | Users | Items | Interactions | Domain |
|---|---|---|---|---|
| FNYC | 4,832 | 5,651 | 182,164 | POI (New York) |
| FTKY | 7,240 | 5,785 | 353,847 | POI (Tokyo) |
| LF1M | 4,546 | 12,492 | 1,082,132 | Music |
| ML1M | 6,040 | 3,706 | 1,000,209 | Movies |
| RENT | 5,050 | 3,397 | 43,770 | Fashion |
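
The counts above also determine how dense each interaction graph is, which helps put the dataset sizes in context. The following sketch computes density as the standard ratio of observed interactions to all possible user-item pairs; the `datasets` dictionary and `density` helper are names introduced here for illustration.

```python
# (users, items, interactions) copied from the dataset table.
datasets = {
    "FNYC": (4832, 5651, 182164),
    "FTKY": (7240, 5785, 353847),
    "LF1M": (4546, 12492, 1082132),
    "ML1M": (6040, 3706, 1000209),
    "RENT": (5050, 3397, 43770),
}

def density(users, items, interactions):
    """Share of all possible user-item pairs that are observed interactions."""
    return interactions / (users * items)

for name, stats in datasets.items():
    print(f"{name}: density = {density(*stats):.2%}")
```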

Models

We evaluate 11 GNN-based models and 5 non-GNN models:

- GNN Models: AutoCF, DirectAU, HMLET, LightGCN, NCL, NGCF, SGL, SVD-GCN, SVD-GCN-S, UltraGCN, XSimGCL

- Non-GNN Models: Traditional collaborative filtering baselines

Key Results

Pre-Analysis: Unfairness in Graph Collaborative Filtering

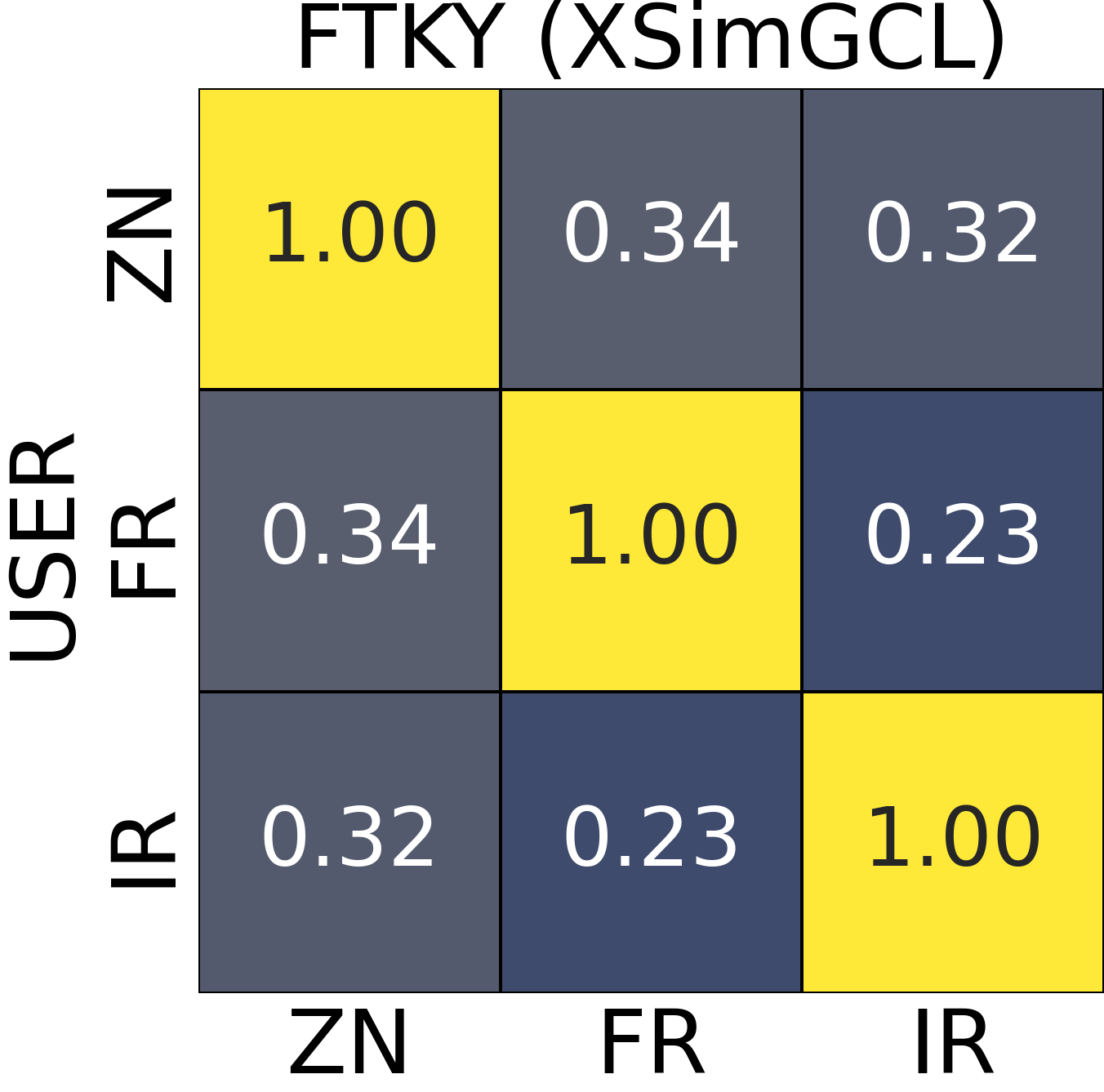

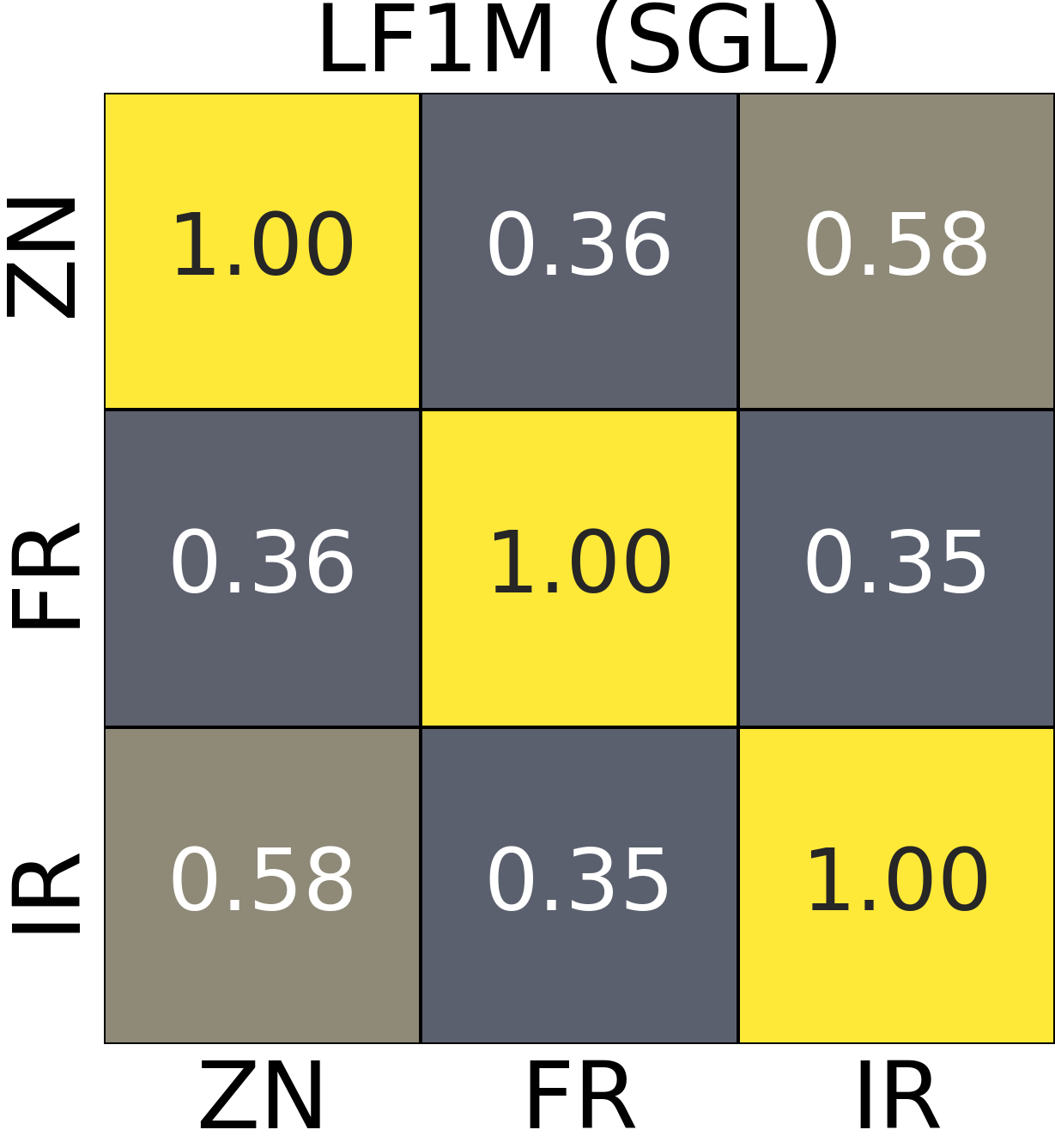

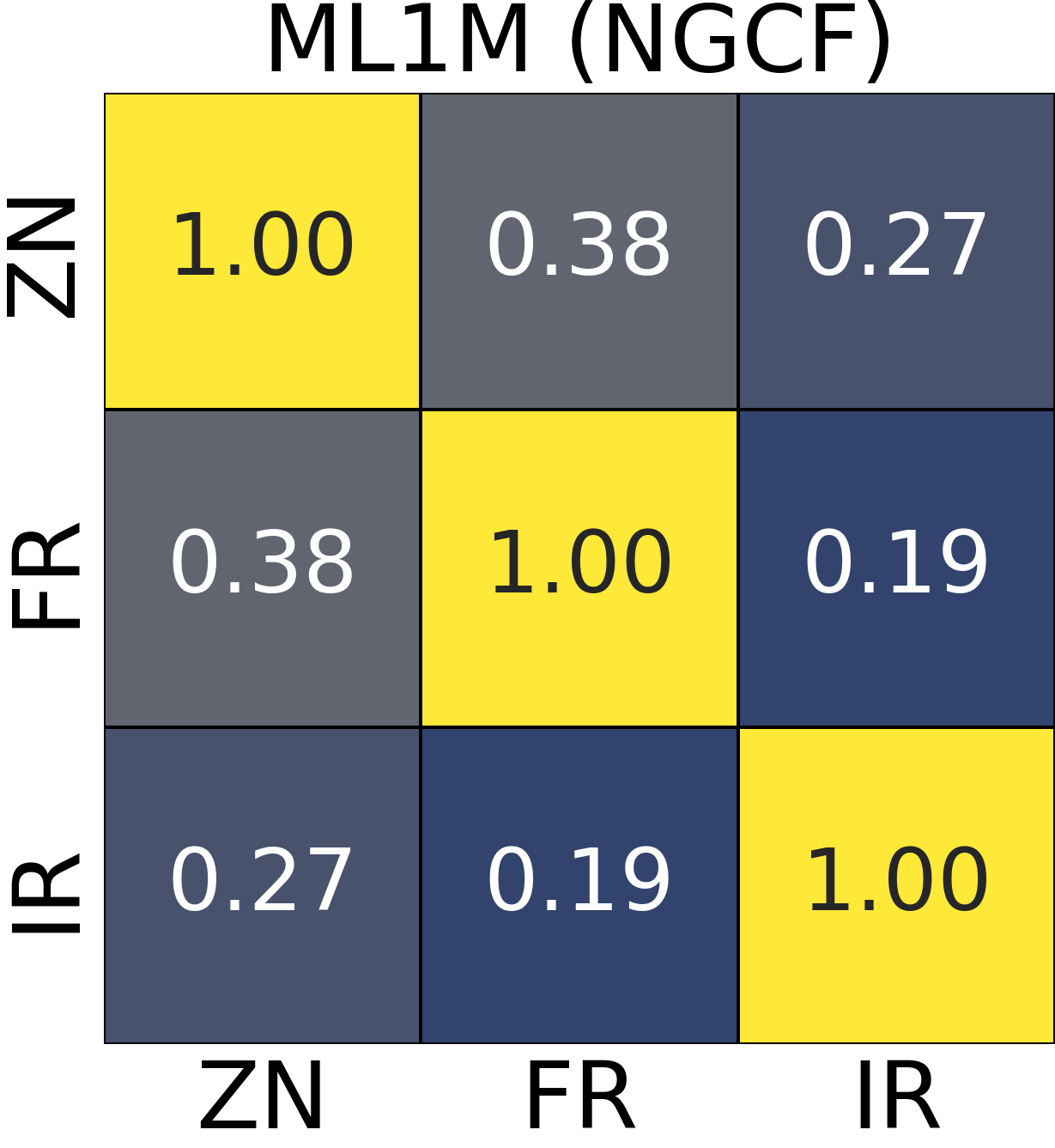

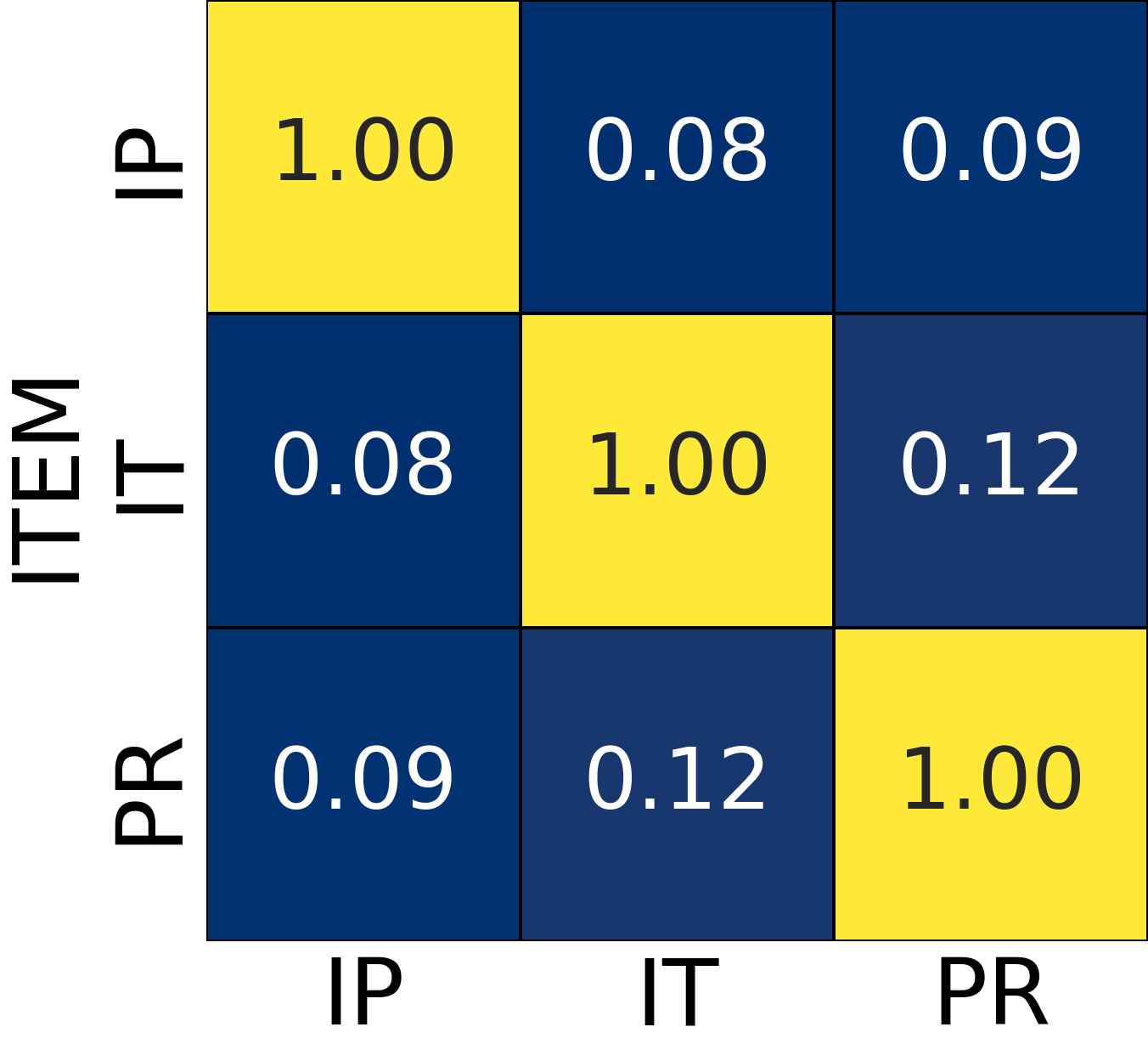

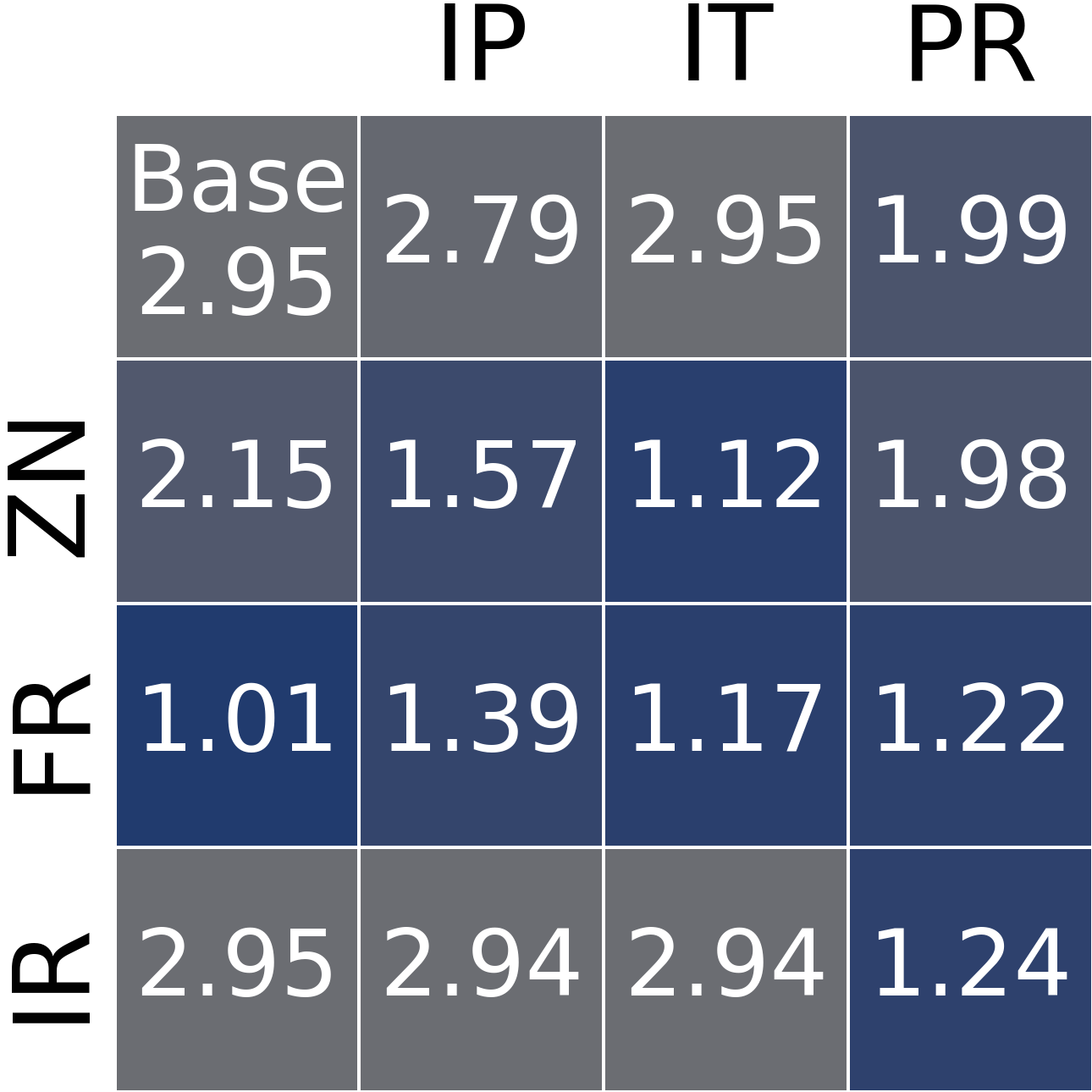

Before applying fairness-aware augmentation, we analyze the overlap between user and item sampling policies across datasets and models.

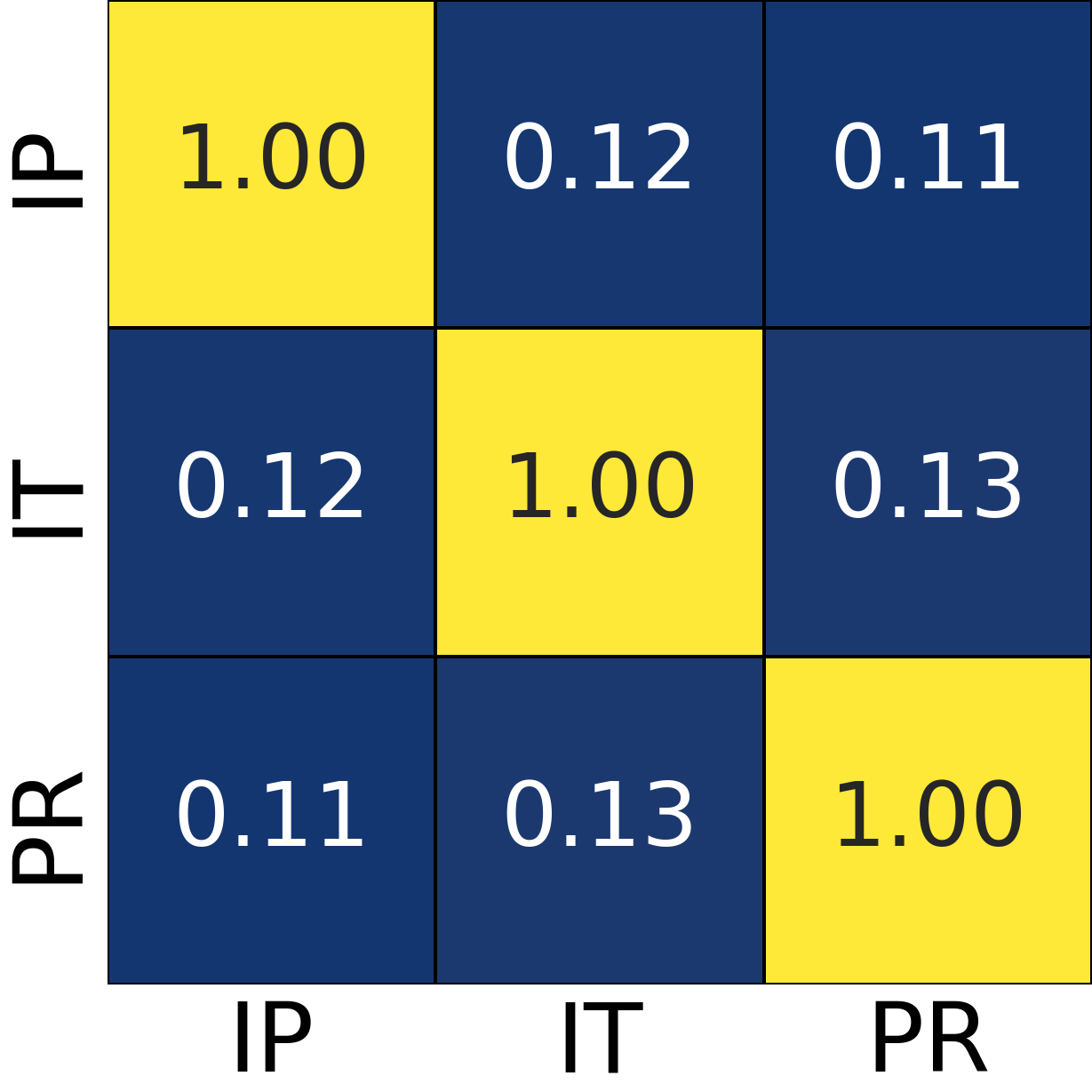

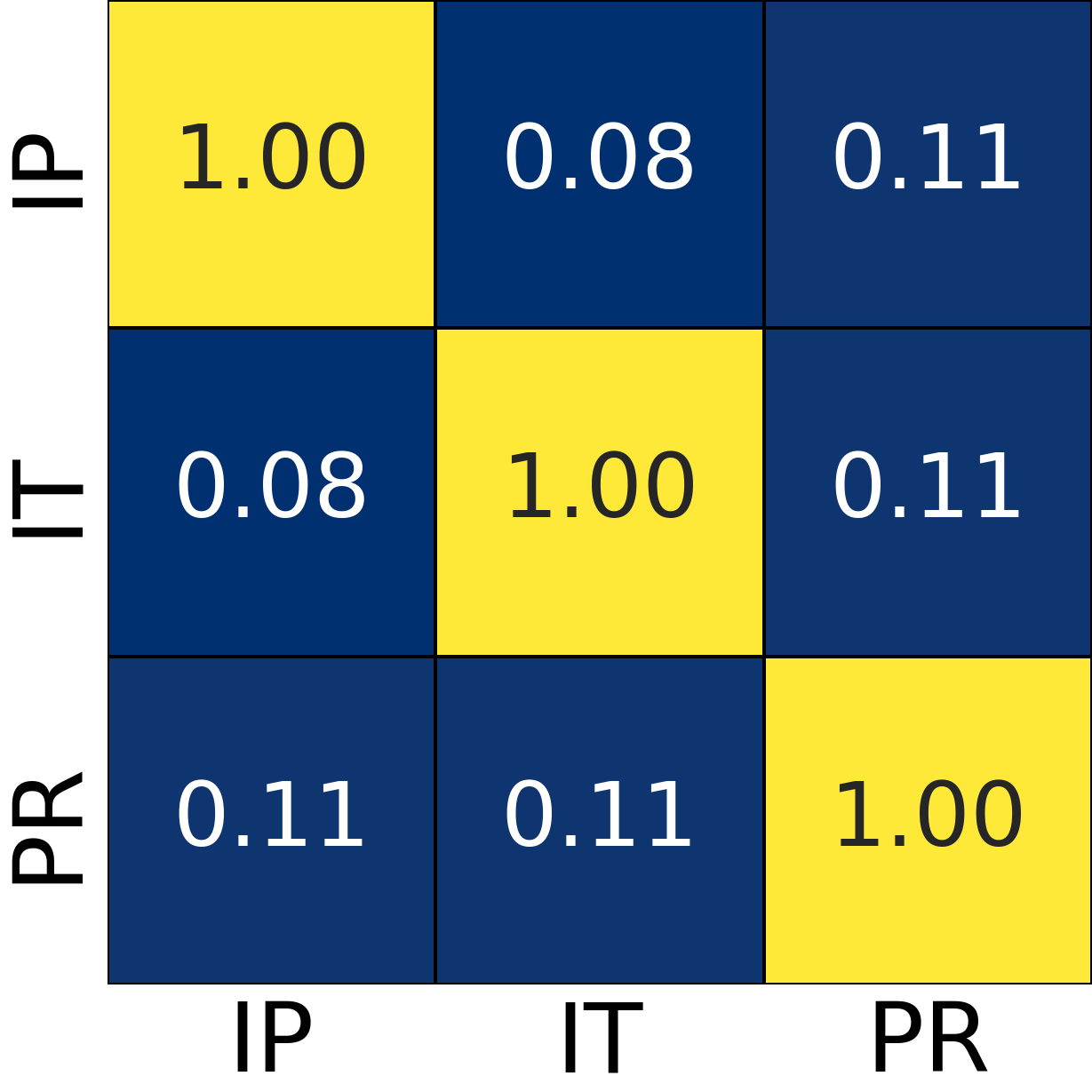

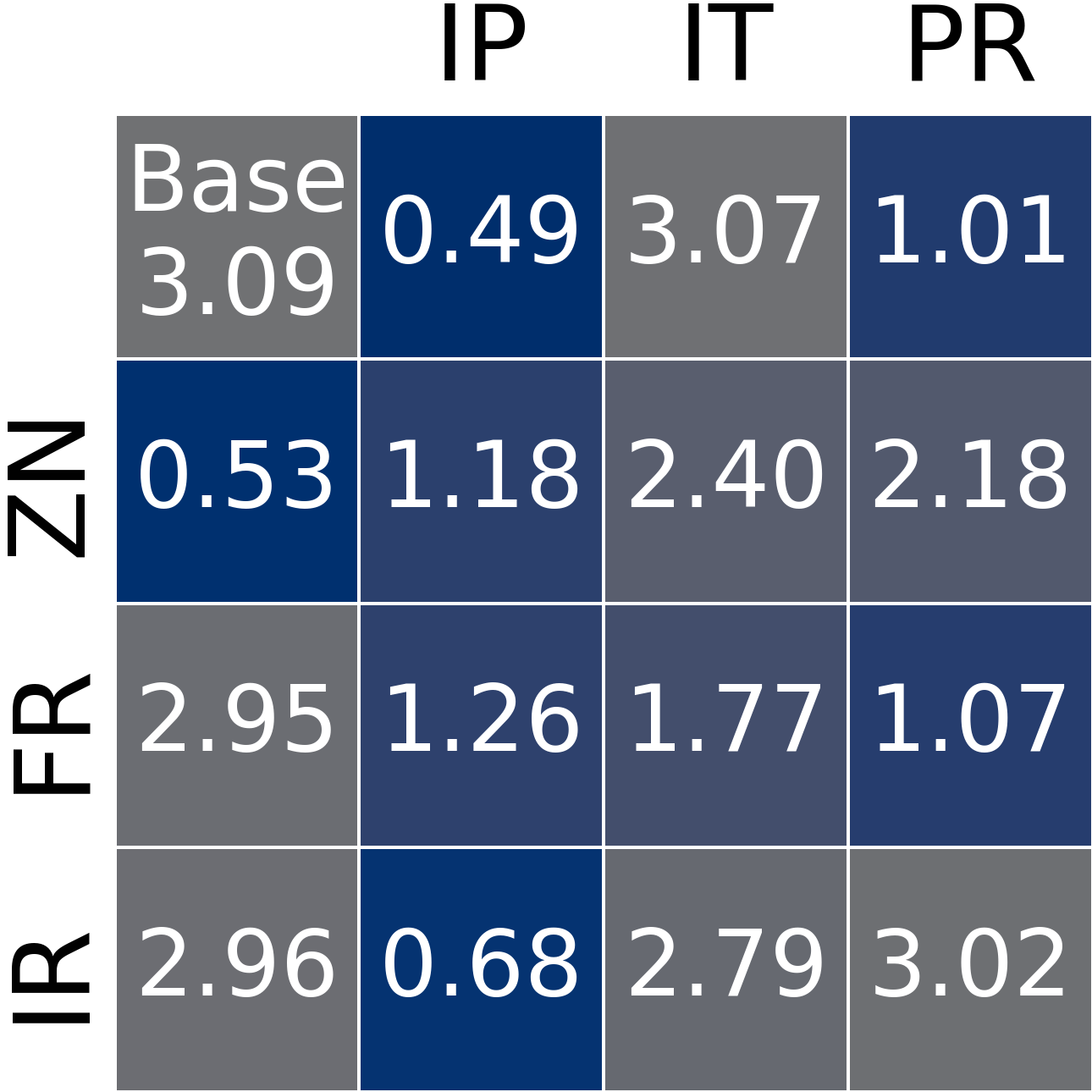

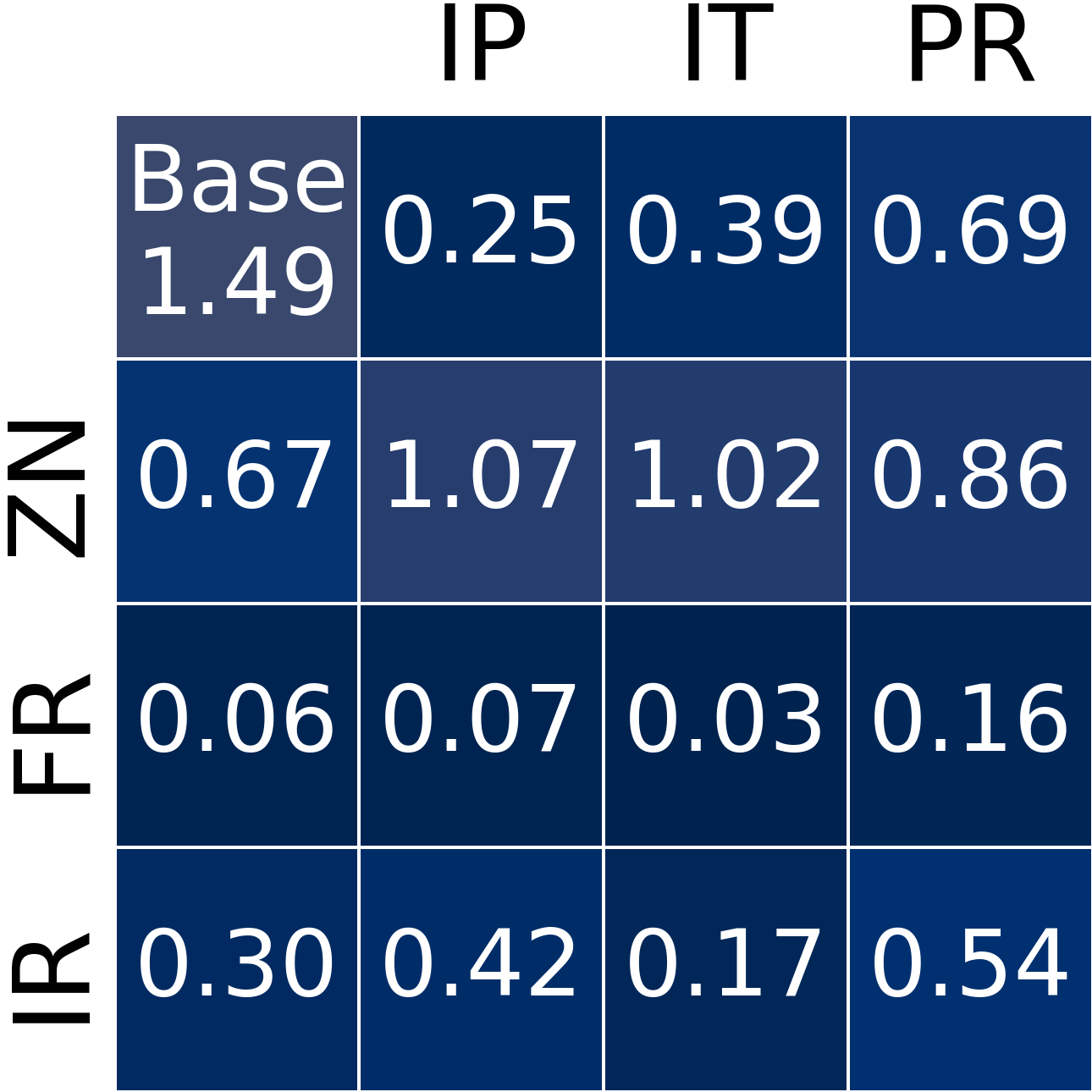

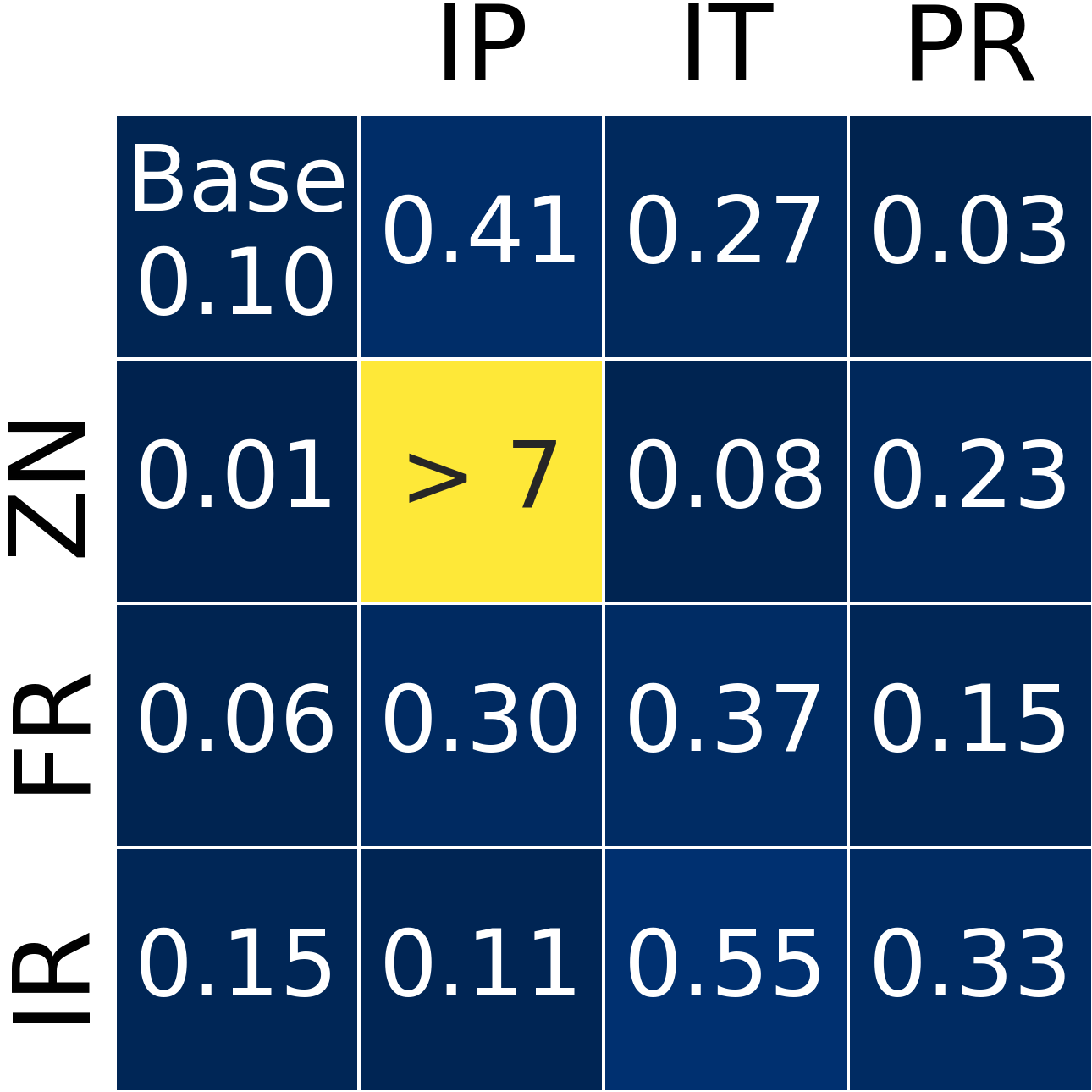

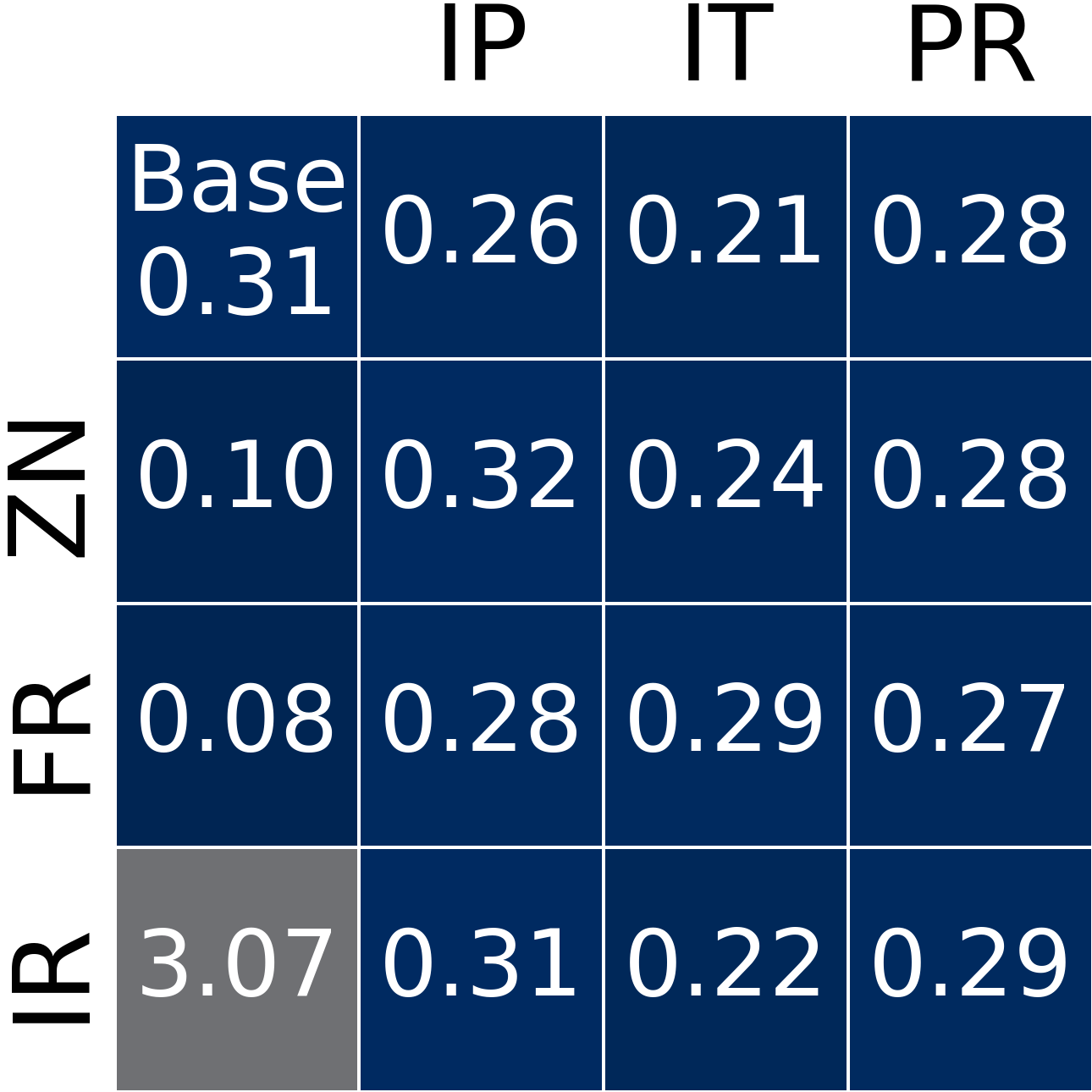

Figure 1: Pre-analysis of policies overlap across datasets and GNN models before applying fair augmentation.

Main Results

Our experiments reveal nuanced findings about fairness-aware augmentation strategies across different settings.

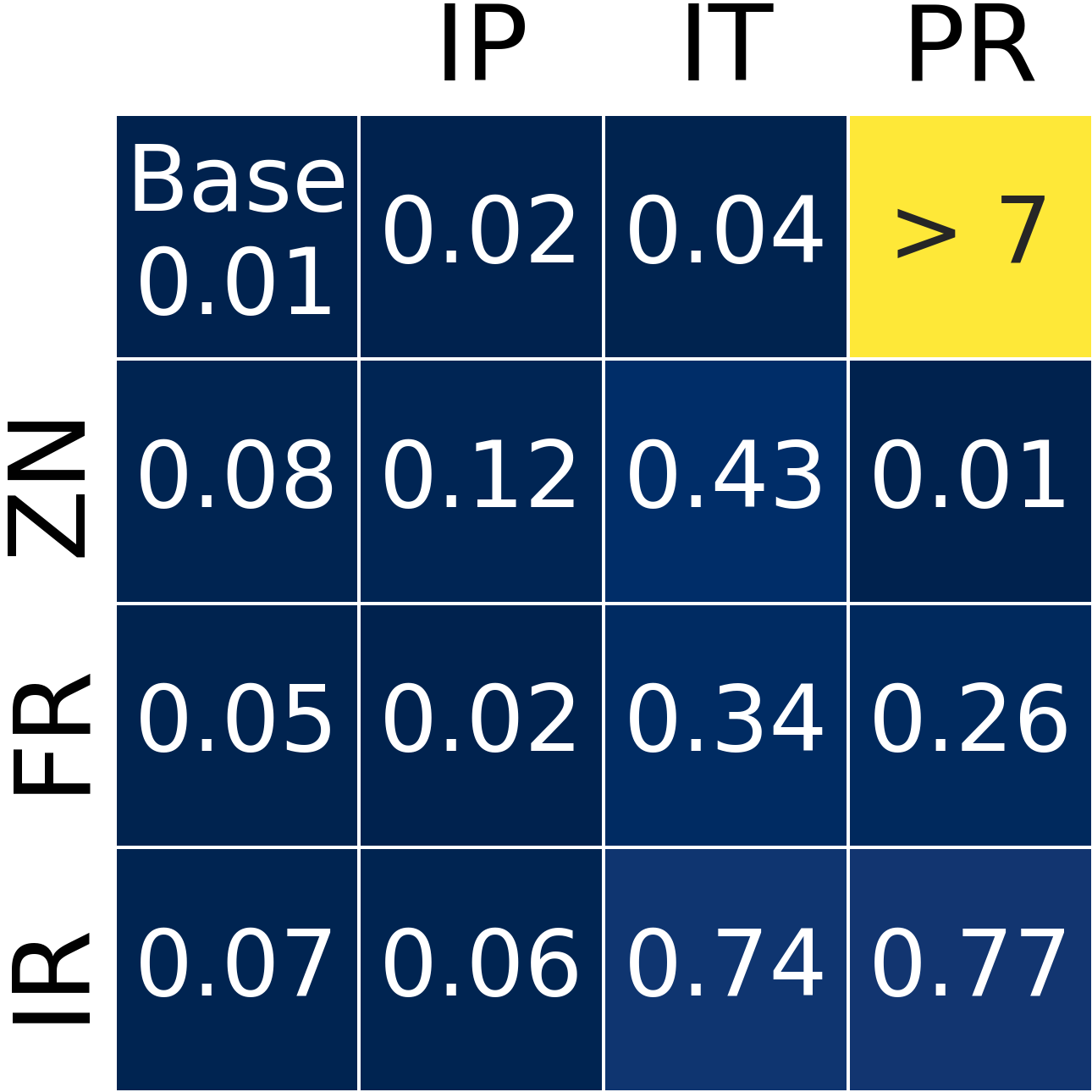

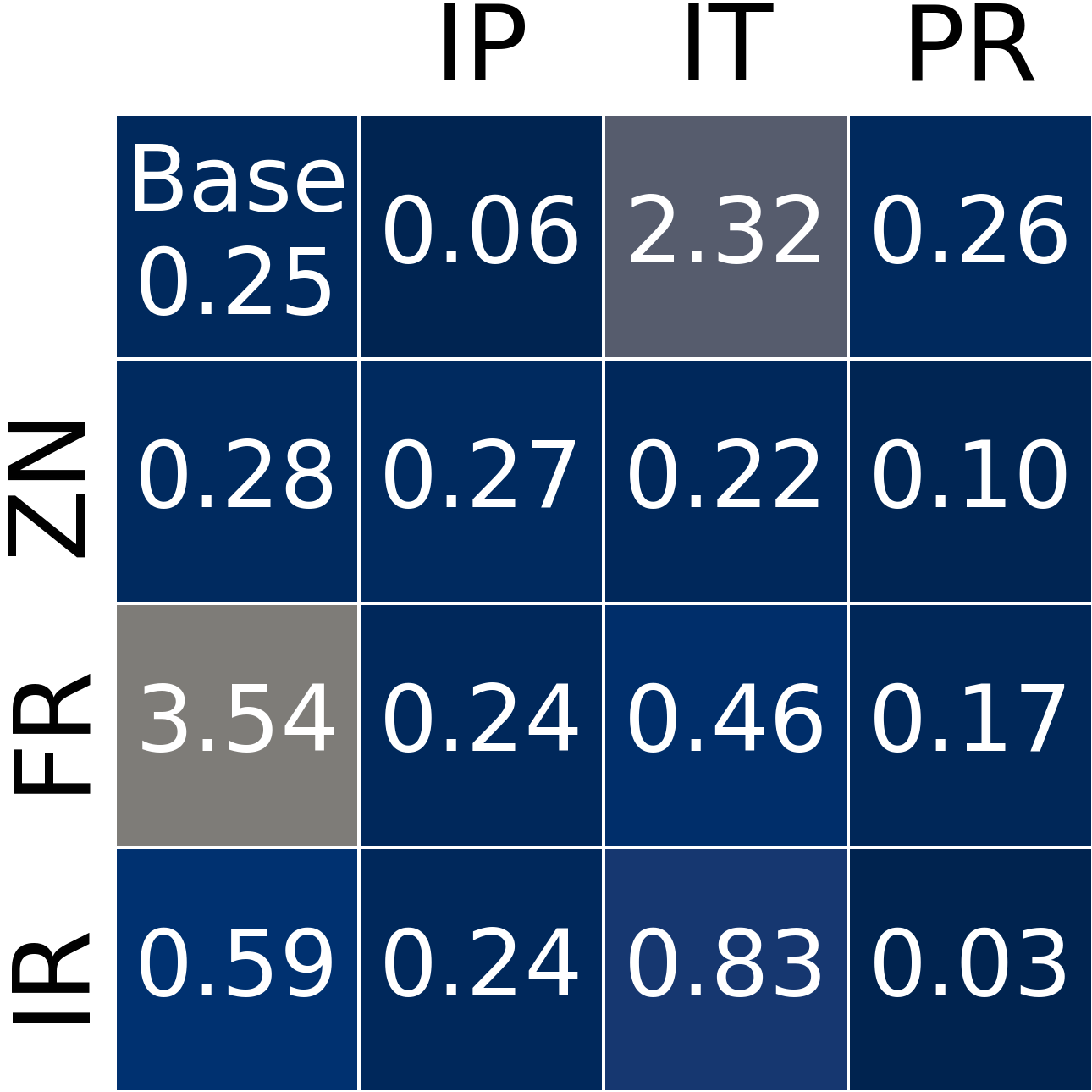

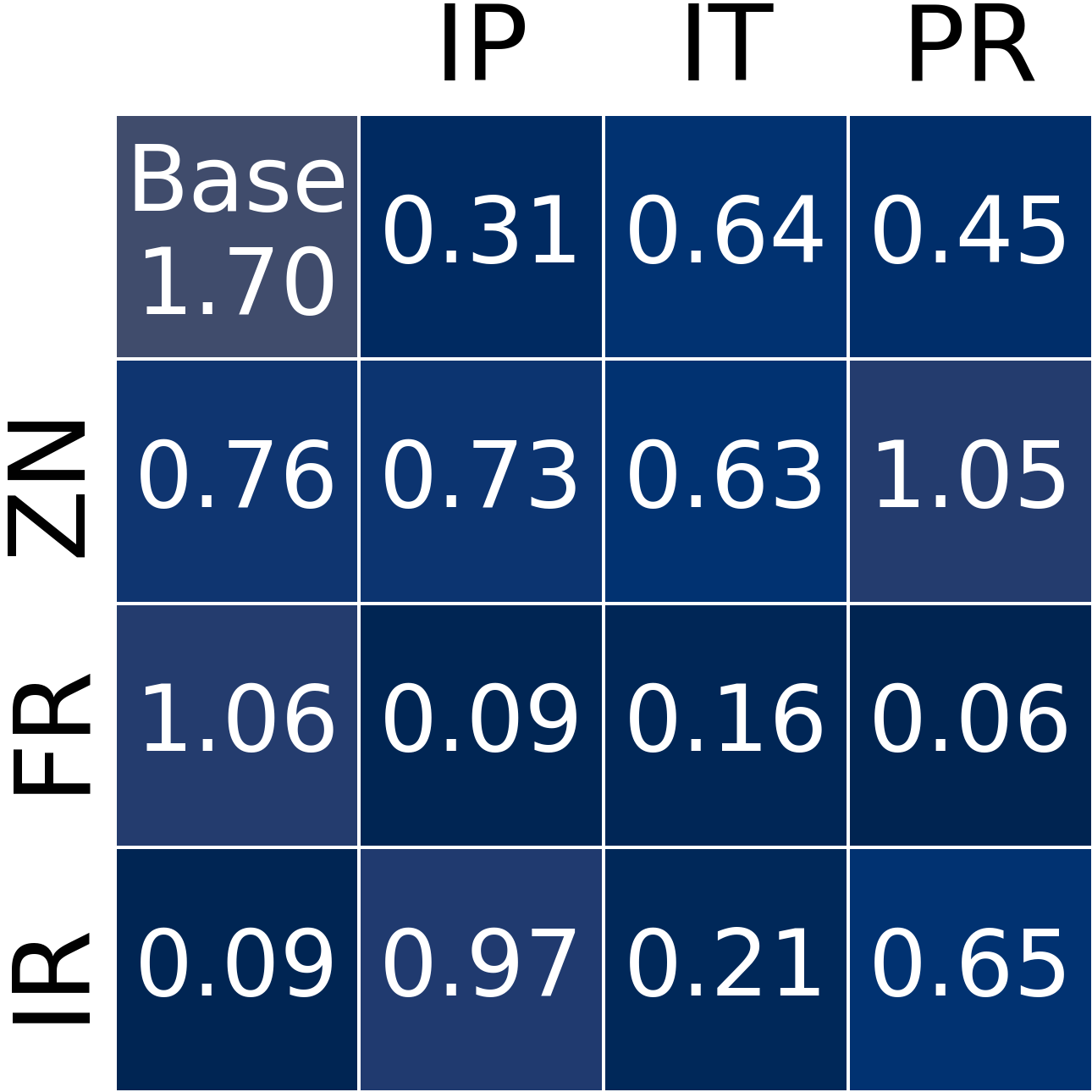

RQ2: Comparison across Sampling Policies

Because the mitigation procedure reduced unfairness for HMLET and LightGCN on almost all datasets, we compare the mitigation level of each sampling policy under these two models. Larger datasets (LF1M, ML1M) benefit more from the mitigation procedure than smaller ones (FNYC, FTKY). The algorithm is especially effective on ML1M, where Δ was reduced in all settings, regardless of the sampling type.

Figure 2: Comparison among sampling policies in terms of unfairness mitigation (Δ percentage) across gender groups for LightGCN (top row) and HMLET (bottom row). The first column (row) of each subplot pertains to User-sampling (Item-sampling) settings. Darker cells indicate lower unfairness (better).

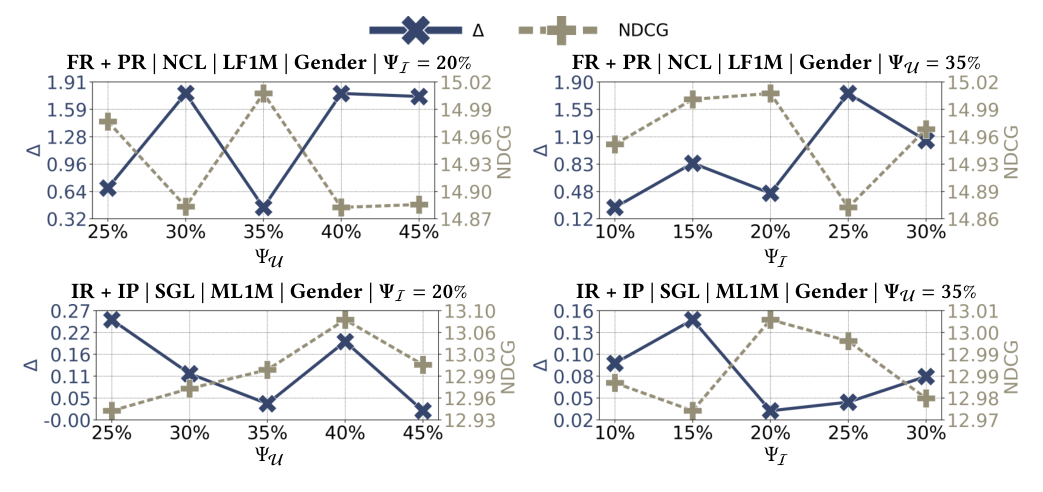
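
To make the quantity reported in Figure 2 concrete, here is a small sketch that assumes Δ is the absolute difference in mean NDCG between two gender groups and that the reported percentage is the relative change in Δ after augmentation. The function names and the toy NDCG values are hypothetical, and the paper's exact metric definition may differ.

```python
import numpy as np

def delta(ndcg, is_group_a):
    """Absolute gap in mean NDCG between the two groups (assumed definition)."""
    ndcg = np.asarray(ndcg, dtype=float)
    mask = np.asarray(is_group_a, dtype=bool)
    return float(abs(ndcg[mask].mean() - ndcg[~mask].mean()))

def delta_change_pct(delta_before, delta_after):
    """Relative change of delta in percent; negative means unfairness dropped."""
    return 100.0 * (delta_after - delta_before) / delta_before

# Made-up per-user NDCG values before and after augmentation.
is_group_a = [True, True, False, False]
ndcg_before = [0.5, 0.5, 0.25, 0.25]
ndcg_after = [0.5, 0.25, 0.25, 0.25]

d_before = delta(ndcg_before, is_group_a)
d_after = delta(ndcg_after, is_group_a)
change = delta_change_pct(d_before, d_after)
print(d_before, d_after, change)
```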

RQ3: Impact of Ψ on Sampling Policies

We analyze the impact of the ΨU and ΨI parameters on the resulting utility and fairness levels. The combination ⟨ΨU = 35%, ΨI = 20%⟩ offers a good trade-off across several settings. The subplots show a consistent trend in which a reduction in Δ is accompanied by an increase in NDCG, and vice versa. This inverse relationship is especially prominent when ΨI varies.
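
The sketch below illustrates one way such percentages could act on a sampling policy. It assumes that ΨU and ΨI denote the share of users and items a policy retains, and it uses node degree as a stand-in ranking criterion; the helper `sample_fraction`, the toy matrix, and the idea of restricting candidate edges to the sampled nodes are all assumptions made for illustration, not the paper's policies.

```python
import numpy as np

def sample_fraction(scores, psi):
    """Indices of the ceil(psi * n) nodes with the lowest score, in index order."""
    scores = np.asarray(scores)
    k = int(np.ceil(psi * len(scores)))
    return np.sort(np.argsort(scores, kind="stable")[:k])

# Toy binary interaction matrix: 4 users x 5 items.
interactions = np.array([
    [1, 1, 1, 0, 0],
    [1, 0, 0, 0, 0],
    [0, 1, 1, 0, 1],
    [1, 1, 0, 1, 1],
])
user_degree = interactions.sum(axis=1)
item_degree = interactions.sum(axis=0)

# Keep 35% of users and 20% of items, lowest degree first (hypothetical policy).
users = sample_fraction(user_degree, psi=0.35)
items = sample_fraction(item_degree, psi=0.20)

# Candidate edges: sampled user-item pairs that are not yet interactions.
candidates = [(int(u), int(i)) for u in users for i in items
              if interactions[u, i] == 0]
print(candidates)
```

Smaller Ψ values shrink the candidate set, which is one plausible reason the utility and fairness levels respond to these parameters.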

Main Findings

- Effectiveness on high-utility models: Fair graph augmentation is consistently effective on high-utility GNN models

- Dataset size matters: The method works better on large datasets

- Transferability challenges: Experiments on the transferability of fair augmented graphs reveal new issues for future research

- Model-specific effects: The effectiveness varies across different GNN architectures

BibTeX

@inproceedings{boratto2024fair,
  author = {Boratto, Ludovico and Fabbri, Francesco and Fenu, Gianni and Marras, Mirko and Medda, Giacomo},
  title = {Fair Augmentation for Graph Collaborative Filtering},
  booktitle = {Proceedings of the 18th ACM Conference on Recommender Systems},
  series = {RecSys '24},
  year = {2024},
  publisher = {Association for Computing Machinery},
  doi = {10.1145/3640457.3688064}
}