Abstract

In recommendation literature, explainability and fairness are becoming two prominent perspectives to consider. However, prior works have mostly addressed them separately, for instance by explaining to consumers why a certain item was recommended or mitigating disparate impacts in recommendation utility. None of them has leveraged explainability techniques to inform unfairness mitigation. In this paper, we propose an approach that relies on counterfactual explanations to augment the set of user-item interactions, such that using them while inferring recommendations leads to fairer outcomes. Modeling user-item interactions as a bipartite graph, our approach augments the latter by identifying new user-item edges that not only can explain the original unfairness by design, but can also mitigate it. Experiments on two public data sets show that our approach effectively leads to a better trade-off between fairness and recommendation utility compared with state-of-the-art mitigation procedures. We further analyze the characteristics of added edges to highlight key unfairness patterns.

Motivation

Current research in recommender systems is increasingly focusing on beyond-utility perspectives, such as explainability and fairness. However, these perspectives are usually considered separately:

- Explainability research has focused on justifying why a certain item was recommended

- Fairness mitigation methods rely on mathematical formulations but are rarely informed by explanatory analyses

Key Insight: We propose the first approach that leverages explainability techniques to inform unfairness mitigation, using counterfactual explanations to identify and add user-item interactions that lead to fairer recommendations.

Method Overview

Our approach augments a user-item interactions graph to counteract consumer unfairness across demographic groups. The key idea is to hypothesize a counterfactual world where disadvantaged users benefit from new edges to improve their recommendation utility.

Augmentation Mechanism

We generate an augmented adjacency matrix à by adding edges to the original matrix A through a learned parameter vector. The augmentation is guided by a two-term loss function:

- Lfair: Quantifies fairness using demographic parity, measuring the disparity in NDCG between demographic groups

- Ldist: Controls the distance between original and augmented graphs to ensure minimal perturbation

Sampling Policies

To narrow the set of candidate edges, we apply several sampling policies:

| Policy | Type | Description |

|---|---|---|

| BM | Base | Base algorithm with no sampling applied |

| ZN | User | Users with no relevant items in top-k (NDCG@k = 0) |

| LD | User | Users with fewest interactions (low degree) |

| SP | User | Users interacting mostly with niche items (sparse) |

| FR | User | Users furthest from advantaged group in graph distance |

| IP | Item | Items most preferred by the disadvantaged group |

Experimental Setup

Datasets

| Dataset | Attributes | Advantaged Groups | Domain |

|---|---|---|---|

| MovieLens 1M | Gender, Age | Males (71.7%), Younger (56.6%) | Movies |

| Last.FM 1K | Gender, Age | Females (42.2%), Older (42.2%) | Music |

GNN-based Models

- GCMC: Graph Convolutional Matrix Completion — reconstructs user-item relevance via encoder-decoder architecture

- NGCF: Neural Graph Collaborative Filtering — propagates embeddings using high-order connectivities

- LightGCN: Lightweight GCN with only neighborhood aggregation

Key Results

Mitigation Performance

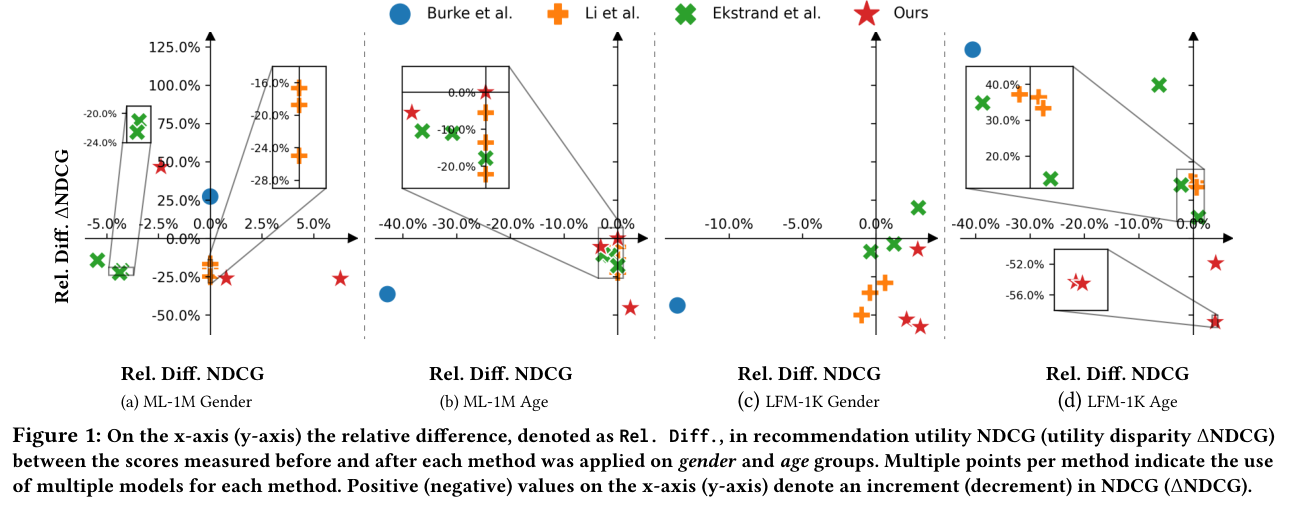

Main Finding: Our approach systematically achieves the best mitigation performance (lowest ΔNDCG) while maintaining or improving recommendation utility across most settings.

- Unlike other methods that decrease utility, our algorithm reports positive or negligible impact on recommendation performance

- The ZN+IP policy combination achieves -100% unfairness reduction for NGCF on ML-1M gender groups

- Adding interactions to users with zero NDCG (ZN policy) consistently improves their recommendation utility

Policy Analysis Insights

- Some policies work consistently regardless of model (data-level bias)

- Other policies only work for specific models (model-dependent bias)

- The augmentation has negligible effect on advantaged group utility — focusing solely on improving the disadvantaged group

Conclusions

We proposed an augmentation method that leverages explanation techniques to mitigate consumer unfairness in GNN-based recommender systems. Our experiments show:

- More reliable unfairness mitigation than state-of-the-art algorithms

- Ability to increase overall recommendation utility

- Confining the algorithm to disadvantaged users with no relevant recommendations positively affects their utility

Limitations: The augmentation had limited impact on deep GNNs (GCMC, NGCF) due to the diminished influence of the graph in the prediction process. Future work will explore new policies, objective functions, and adoption to non-GNN models.

BibTeX

@inproceedings{boratto2023counterfactual,

author = {Boratto, Ludovico and Fabbri, Francesco and Fenu, Gianni and Marras, Mirko and Medda, Giacomo},

title = {Counterfactual Graph Augmentation for Consumer Unfairness Mitigation in Recommender Systems},

booktitle = {Proceedings of the 32nd ACM International Conference on Information and Knowledge Management},

pages = {3753--3757},

year = {2023},

publisher = {ACM},

doi = {10.1145/3583780.3615165}

}